Wednesday, December 26, 2007

WebSphere Process Server and ESB 6.1 Available

Links to more info:

WebSphere Process Server 6.1 new features

WebSphere Enterprise Service Bus 6.1 new features

WebSphere Integration Developer 6.1 new features

Enjoy!

Tuesday, November 20, 2007

WebSphere SIP Performance and Enterprise QoS helps AT&T

Another effort from my performance team members is documented in the latest IBM press release.

This effort shows how:

- WebSphere Application Server is leading the way in critical new technologies (SIP)

- WebSphere Application Server performance is not only leading but also carrier-grade, while remaining enterprise capable, including High Availability.

- IBM remains committed to driving open and trusted industry-standard benchmarking.

In WebSphere Application Server 6.1, we added support for the IETF Session Initiation Protocol (SIP). SIP is a request/response protocol for negotiating communication between two endpoints, and it has been widely adopted in the telecommunications industry. SIP is useful in Voice over IP (VoIP), web click-to-call, conferencing, interactive voice response systems, instant messaging, and presence applications.

Below is a basic example of a session initiation between two parties. It shows a swim lane diagram of user@ibm.com starting a discussion with user@example.com with proxies inline to negotiate the session.
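To make the request/response nature of SIP concrete, the exchange between user@ibm.com and user@example.com starts with an INVITE request. The sketch below formats a minimal INVITE as plain text, using the headers RFC 3261 requires on an INVITE (Via, Max-Forwards, To, From, Call-ID, CSeq, Contact). The class name, host name, branch, tag, and Call-ID values are hypothetical placeholders; a real SIP servlet application would let the container build the message (for example through the JSR 116 SipFactory) rather than formatting text by hand.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper that formats a minimal SIP INVITE request in RFC 3261 syntax. */
final class SipInviteSketch {

    static String buildInvite(String fromUri, String toUri, String viaHost,
                              String branch, String fromTag, String callId) {
        // Header order is not significant in SIP; LinkedHashMap just keeps the output readable.
        Map<String, String> headers = new LinkedHashMap<>();
        headers.put("Via", "SIP/2.0/UDP " + viaHost + ";branch=" + branch);
        headers.put("Max-Forwards", "70");
        headers.put("To", "<" + toUri + ">");
        headers.put("From", "<" + fromUri + ">;tag=" + fromTag);
        headers.put("Call-ID", callId);
        headers.put("CSeq", "1 INVITE");
        // Contact normally carries the caller's reachable device address;
        // the caller's URI is reused here for brevity.
        headers.put("Contact", "<" + fromUri + ">");
        headers.put("Content-Length", "0");   // no SDP body in this sketch

        StringBuilder msg = new StringBuilder();
        msg.append("INVITE ").append(toUri).append(" SIP/2.0\r\n");   // request line
        for (Map.Entry<String, String> h : headers.entrySet()) {
            msg.append(h.getKey()).append(": ").append(h.getValue()).append("\r\n");
        }
        msg.append("\r\n");   // an empty line ends the header section
        return msg.toString();
    }

    public static void main(String[] args) {
        // RFC 3261 branch values begin with the "z9hG4bK" magic cookie.
        System.out.print(buildInvite("sip:user@ibm.com", "sip:user@example.com",
                "client.ibm.com", "z9hG4bK-demo1", "a1b2", "demo-call-1@client.ibm.com"));
    }
}
```

In the basic flow, the callee answers with provisional responses (such as 180 Ringing) and then 200 OK, the caller confirms with ACK, and a later BYE ends the session; the proxies in between forward these messages.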

In the press release, we announce how AT&T plans to use our SIP technology: "AT&T is using IBM WebSphere Application Server and BladeCenter systems as a Session Initiation Protocol (SIP) service logic execution environment platform to develop mission-critical services for deployment on AT&T's IP-based network."

The features AT&T needs include not only SIP but also the technology that makes latency-dependent applications such as VoIP possible, along with enterprise-class high availability. Performance matters too, so the press release also details an internal SIP benchmark we ran, along with the results: "IBM's WebSphere Application Server delivers telecom class infrastructures with a focus on SIP features. It is a carrier-grade application server that utilizes a converged HyperText Transfer Protocol (HTTP) and SIP container. It allows service providers to quickly offer new personalized and productivity enhancing services that subscribers demand. WebSphere Application Server 6.1.0.11 using Red Hat Linux and integrated in the IBM BladeCenter HT chassis, which is network equipment building systems (NEBS) compliant, has achieved an industry leading SIP performance measurement of 1296 calls per second, using a 13 message SIP call model (with 80 second hold time) which translates to over 4.6 million busy hour call attempts per blade. Using this call model, in a high availability, carrier-grade configuration, WebSphere Application Server achieved 660 calls per second per blade with session replication. These results were achieved while retaining extremely low end-to-end SIP message processing latency of under 50 milliseconds ninety five percent of the time. This exhibits the ability of WebSphere Application Server to handle the call volumes businesses demands while ensuring service quality."
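The busy hour figure in the quote is straightforward arithmetic: calls per second multiplied by the 3,600 seconds in an hour. The sketch below reproduces it for both quoted rates, assuming the measured rate is sustained for the full hour. It also adds one derived number that is not in the press release: a rough count of calls held open at once, using Little's law (calls in progress = arrival rate × hold time) and ignoring setup and teardown time. The class and method names are illustrative only.

```java
/** Illustrative arithmetic for the quoted SIP call model figures. */
final class CallModelMath {
    static final long SECONDS_PER_HOUR = 3600;

    /** Busy hour call attempts, assuming the measured rate holds for a full hour. */
    static long busyHourCallAttempts(long callsPerSecond) {
        return callsPerSecond * SECONDS_PER_HOUR;
    }

    /** Little's law (L = lambda * W): steady-state calls in progress, ignoring setup/teardown time. */
    static long concurrentCalls(long callsPerSecond, long holdSeconds) {
        return callsPerSecond * holdSeconds;
    }

    public static void main(String[] args) {
        System.out.println(busyHourCallAttempts(1296));   // 4665600, i.e. "over 4.6 million"
        System.out.println(busyHourCallAttempts(660));    // 2376000 with session replication
        System.out.println(concurrentCalls(1296, 80));    // 103680 calls held open at once
    }
}
```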

What does this mean?

It means that our converged web container (supporting typical HTTP servlets, JSR 168 portlets, and now JSR 116 SIP Servlets) can perform SIP operations at carrier-class speeds. We demonstrated this using an internally developed SIP call model benchmark. The benchmark is similar to benchmarks being used externally by other SIP platform vendors, but only IBM added high availability to the benchmarking scenario. IBM shows that we lead in performance where it counts: in enterprise-ready high availability scenarios.

Based on my previous posts, you would assume we'd want to push SIP benchmarking to standardized benchmarking organizations so the measurements can be trusted by customers. Well, we are doing exactly that! IBM, along with other vendors, has formed a subcommittee at SPEC to develop an industry SIP benchmark. Erich Nahum (IBM) is chairing the group and has requested public feedback on the current proposal.

If you'd like to find out more about SIP, Erik Burckart has a two-part article on SIP containing both an introduction to SIP and a guide to developing converged SIP applications.

Congrats again to my most excellent performance team members.

Update: Telephony Online coverage that includes comments on SIP performance.

Thursday, November 15, 2007

The wonders of modern hardware

http://video.google.com/videoplay?docid=5125780462773187994

Tim Francis

Tuesday, November 6, 2007

Rational Application Developer

The RAD 7.5 beta also provides support for the WebSphere EJB3 feature pack, the beta for which is available now for WebSphere 6.1. RAD support for EJB 3.0 lets us really exploit annotation-based programming models. Previous versions of the spec focused on deployment descriptors, and the matching RAD releases had advanced editors to support them. In RAD 7.5, the focus is on Java developers: we use the Java editor as a base, but extend the already powerful Eclipse editor with significant new function and capability to support developing annotation-based applications.

The intent is simple; most developers creating applications for WebSphere are quite comfortable using Java, and we have a great Java editor in RAD/eclipse - so it's a mistake to force them to switch to a whole new environment (with wizards and graphical editors) when they want to define some aspect of server interaction (such as Web Services or EJBs). This is a natural extension to what the spec defines, but the way we're supporting that pattern within RAD is new, easy to use, and productive.

The other aspect that I'm thrilled about is just the fact that we're running an open beta, less than a year after we shipped the last major version of RAD. We're not done yet, and we have more good ideas that we're still implementing - but the code is of great quality, and the performance is good... and this shows a commitment to delivering a rock solid, robust, and well performing RAD. The intent is to encourage developers to explore the product, and not feel concerned that they'll get in trouble if they try something non-standard or off the golden path.

I'm really pleased with the progress the team has made on RAD 7.5; this is going to be the best version of RAD we've ever delivered, and the open beta gives you a chance to see where we're at, comment on what you see, and help us refine the product to make it exactly what you need. You can sign up for the beta here, and there will be newsgroups available to report problems, ask questions, and give us your feedback.

Tim Francis

Monday, November 5, 2007

InfoQ interview on IBM SPECjAppServer leadership and benchmarking in general

http://webspherecommunity.blogspot.com/2007/10/websphere-beats-bea-and-oracle.html

Here is the interview:

http://www.infoq.com/news/2007/11/ibm-specj

Feel free to post follow-up questions here or on the InfoQ forums.

InfoQ is an excellent news site for tracking important news in enterprise computing.

SPEC is a non-profit organization that establishes, maintains and endorses standardized benchmarks to measure the performance of the newest generation of high-performance computers. Its membership comprises leading computer hardware and software vendors, universities, and research organizations worldwide. For complete details on benchmark results and the Standard Performance Evaluation Corporation, please see www.spec.org/. Competitive claims reflect results published on www.spec.org as of October 03, 2007 when comparing SPECjAppServer2004 JOPS@Standard per CPU Core on all published results.

Thursday, October 4, 2007

WebSphere Beats BEA and Oracle Performance By Over 37%

"A powerful IT infrastructure is also required to support the growing number of transactions across the enterprise, as well as with customers, partners and suppliers. IBM recently beat competitors by 37 percent in an industry benchmark that measures high-volume transaction processing that are typical in today's customer environments. IBM WebSphere Application Server established record-breaking SPECjAppServer2004 benchmark performance and scalability results involved more than 15,500 concurrent clients and produced 1,197.51 SPECjAppServer2004 JOPS@Standard (jAppServer Operations Per Second), which translates into more than 4.3 million business transactions over the course of the benchmark's hour long runtime."

This shows our commitment to standard benchmarking organizations, as I described in my last blog post. Because IBM leads a benchmark that was designed and approved by all participating SPEC vendors, IBM customers truly benefit from the demonstrated performance leadership.

We chose to lead in the category that matters most to our customers: per core performance. If you divide the result by the number of J2EE server cores, we lead Oracle by 37% and smash BEA by 56%, with approximately 300 JOPS/core. Comparing per core (CPU) normalized performance is really the best way to compare one application server and hardware stack to another. This demonstrates IBM's leadership of the industry in J2EE performance without question.

This result also shows the combined power of an IBM stack. Specifically, we achieved this by running WebSphere Application Server v6.1 on the IBM 5.0 JVM on AIX 5L V5.3 with an IBM POWER6 p570 server powered by two dual-core IBM® POWER6® 4.7 GHz processors against IBM DB2 Universal Database v9.1 running on a single POWER6-based server.
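The per core figure follows from the numbers in this post: 1,197.51 JOPS across two dual-core POWER6 chips (four cores) comes to about 299.4 JOPS/core, and 1,197.51 operations per second over an hour-long run is about 4.31 million operations. The sketch below shows that normalization plus the percentage-lead formula. The competitors' published per core figures are not repeated in this post, so the lead formula is shown only generically; the class and method names are illustrative.

```java
/** Illustrative per core normalization of a SPECjAppServer2004 JOPS result. */
final class PerCoreNormalization {

    /** JOPS divided by the number of application server cores. */
    static double jopsPerCore(double jops, int chips, int coresPerChip) {
        return jops / (chips * coresPerChip);
    }

    /** Percentage by which result a exceeds result b (37.0 means a 37% lead). */
    static double percentLead(double a, double b) {
        return (a / b - 1.0) * 100.0;
    }

    /** Total operations if the per-second rate holds for the whole run. */
    static double operationsOverRun(double opsPerSecond, int runSeconds) {
        return opsPerSecond * runSeconds;
    }

    public static void main(String[] args) {
        double perCore = jopsPerCore(1197.51, 2, 2);   // two dual-core POWER6 chips
        System.out.printf("%.1f JOPS/core%n", perCore);                                  // 299.4 JOPS/core
        System.out.printf("%.0f operations%n", operationsOverRun(1197.51, 3600));        // 4311036 operations
        // A competitor comparison would be percentLead(perCore, competitorPerCore),
        // using the competitor's published result divided by its own core count.
    }
}
```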

Congrats again to my team, who once again dominate where it matters: customer-focused, standards-based benchmarks.


Monday, October 1, 2007

A few pictures from London

Thursday, September 27, 2007

SCA Rock Rolls Forward

Congratulations!

Monday, September 24, 2007

Perspective in Benchmarks - My thoughts on Microsoft StockTrader

In my opinion, Microsoft uses its report to focus on three points:

- Attempt to prove that Microsoft is interoperable with WebSphere software

- Attempt to prove Microsoft’s SOA relevancy and readiness for the enterprise

- Attempt to prove Microsoft performance is better than WebSphere software performance

I’d like to state up front that I was personally involved with the development of IBM’s Trade benchmark starting with Trade 3 and ending with Trade 6.1. Others on my team have made significant contributions to the code as well, to ensure that Trade continues to be a credible and useful tool for expressing performance concepts and release-to-release enhancements to IBM customers for J2EE (Java EE) programming.

Some level of interoperability

I applaud any effort by Microsoft or any other software vendor in pursuit of interoperability. However, the interoperability the Microsoft report speaks to is basic web services functionality (SOAP/WSDL/XML) only. It does not focus on interoperability of transactions, security, reliability, durability and does not use industry standard schemas that many of our customers need for cross enterprise (B2B) or intra-enterprise web services interoperability with .NET clients calling WebSphere servers. Independent of the Microsoft report, Microsoft and IBM have already focused on interoperability of these higher value qualities of service in Web Services through industry standard WS-I efforts as shown here and here. I am very happy to see IBM and Microsoft continue to focus on standards based interoperability. I am confident that WS-I will continue to facilitate customer focused web services interoperability in these higher value web service functionalities.

Is Microsoft enterprise ready?

Press coverage has cited the Microsoft report as helping Microsoft prove it is ready for the enterprise. From our quick look at Microsoft’s code, this doesn’t seem to be the case. Many of the “enterprise ready” features stressed by the Microsoft report are hand coded into the application. Areas such as multiple vendor database support, load balancing, high availability, scalability, and enterprise-wide configuration for services contribute to the significantly higher lines of code count in the Microsoft application compared to the WebSphere implementation. You should equate a higher lines of code count with higher long-term maintenance and support costs, and think about the value of having this provided by the application server product rather than by the application itself. Some of the additional lines of code are comments, but the comments themselves point out the many places where Microsoft deviated from using framework classes and instead implemented custom extensions to fill gaps in functionality lacking in their framework.

As an author of Trade, I must admit an embarrassing fact. Four years ago, my team added web services capability to Trade as an optional component, to demonstrate web services as an alternative to remote stateless session bean methods. Since that time, I have personally worked hand in hand with many enterprise customers adopting web services. We have found that the Trade approach to web services wasn’t the best. Specifically, the fine grained “services” in Trade pass around 400-800 bytes of data on average. As you’ll see in my recent blog post, industry standard schemas for B2B typically have much larger payloads. While Trade was a fun exercise for my team in learning web services, it in no way mirrors what our customers have since told us are good web service practices. Current SOA principles motivate more coarse-grained services. The embarrassing fact is that we have never gotten around to removing the poor examples of web services usage from Trade. However, it is interesting to note that Microsoft did not recognize or point out the obvious flaws in these web service patterns during their analysis; they merely parroted what they saw.

Microsoft titled this paper “Service Oriented”. Later in the press, Microsoft alludes to this “Service Oriented” benchmark test to help draw credibility to Microsoft’s SOA strategy. Based on what I just said about Trade web services, you’ll see why IBM has never talked about Trade and web services to help customers understand web service performance. Meanwhile, this Microsoft report focuses on these trivial payload web services to prove they can support “Service Oriented” benchmarks. Follow-up posts and press coverage picked up the “Service Oriented” title, proclaiming that this in some way helps Microsoft’s SOA strategy. Drawing that conclusion is incorrect. The industry agrees that SOA is more than trivial web services. SOA is about a business centric IT architecture that provides more agile and flexible IT support of business needs. Realistic web service usage is a cornerstone of SOA, but web services alone do not define SOA.

Is Microsoft performance better than WebSphere performance?

I believe Microsoft is trying to draw IBM out of our commitment to standard benchmarking organizations to confuse customers about performance. You can draw your own conclusions from specific comments apparently made by Greg Leake in press coverage, asking for IBM to “collaborate”. Some have commented correctly in community discussions that the Microsoft report isn’t specific enough in terms of topology, hardware, and tuning to support any sensible conclusions from the performance data. It is a compelling story that this Microsoft report weaves: Microsoft beating IBM on its own benchmark. However, IBM didn’t run Trade as a benchmark in the way shown in the paper’s results. As a customer, you should always be careful how much you trust proprietary benchmark results produced by a single vendor. These things can always be coerced to create FUD and confusion.

We have reviewed the paper and results and found inconsistencies with the best practices for running WebSphere Application Server. Taking into account the items Microsoft chose not to document, along with the performance improvements that following best practices allows, we believe that IBM WebSphere Application Server would in fact win across all the scenarios shown in the results.

You may well ask: if WebSphere Application Server would win, why not say so and publish a contrary IBM report? I don’t believe publishing a proprietary view of performance would help our customers, for all the reasons stated above. At best, if IBM were to respond to this paper, you could expect both vendors to degrade to the lowest common coding styles for implementing the benchmark so they would “win”. As Microsoft’s implementation already shows, Microsoft would choose not to use their framework classes and features, but instead to code optimal patterns in the application code. When those patterns are not tested and supported by the formal product, no customer wins by seeing the resulting performance.

IBM has a history of competing in standard benchmarking organizations such as SPEC. We do so because such organizations are standards based and unbiased and therefore trusted. Standards-based benchmarking processes give all participating vendors equal opportunity to review run rules, implementation code, and the results of other vendors. Given this, if you find Trade and SOA benchmarks useful, maybe it is time for IBM and Microsoft to jointly propose a SOA benchmark to a standard benchmarking organization. SOA benchmarking under standard benchmarking organizations is where our customers and the industry can truly benefit.

Summary

I personally talk to many customers about performance, SOA, and web services. I stand behind everything I say technically, independent of marketing. I build strong long lasting relationships with these customers, many of whom know me personally. In good faith, I can stand in front of them with results from a standards benchmarking organization. I can stand in front of them showing our SOA leadership based on both customer references and analyst reports. On the other hand, I cannot in good faith show a one-off competitive benchmark run by a single vendor. I hope you can understand this position and that it helps you discuss the coverage of this Microsoft report and any similar efforts by any vendor that follow.

This Microsoft report shows basic levels of interoperability, while work within the WS-I shows higher levels of interoperability. The Microsoft report points out that Microsoft has to hand code many enterprise ready features that are just taken care of for you in WebSphere technology. The title of this Microsoft report and its follow-on press coverage attempt to confuse the industry on SOA, which points out how desperate Microsoft is to get press coverage in SOA. This Microsoft report doesn’t do a good job of showing the true performance story. Specifically on performance, I encourage all customers to put their trust in standard benchmarking organizations.

Tuesday, September 11, 2007

New Redbook with SOA customer case study

The Redbook does a good job of breaking down SOA implementation into a series of logical decisions, with real world implications. It covers not only the overall architecture, but the steps to get there. The final solution includes much of the WebSphere Business Process Modeling stack, including WebSphere Application Server, WebSphere Process Server, and WebSphere Portal, as well as tooling from WebSphere Integration Developer and WebSphere Business Modeler.

In particular, the Redbook shows how the SOA solutions fit into the existing ecosystem at the customer shop. SOA is evolutionary, not rip-and-replace. A good read.

Friday, August 24, 2007

SOA certification program launched

IBM Federal SOA Institute Launches IT Certification Program: IBM today announced the first Service Oriented Architecture (SOA) certification and training program for Federal IT professionals. The program was established to provide knowledge, and share important skill sets needed, for SOA development and adoption. The 12-week certification program is sponsored by the IBM Federal SOA Institute and will run from September 12th through December 4th. A second series is scheduled for Spring 2008.

Two distinct course tracks are available: an entry level curriculum in order to become a certified SOA Associate, intended for those who are new to SOA concepts and would like to increase their overall understanding; and an advanced curriculum, to become a certified SOA Solution Designer, structured for senior IT professionals who require enhanced knowledge to deploy SOA capabilities within their organization.

Certification modules cover issues such as the value of SOA, determining return on investment, bridging the gap between technical and business teams, basic architecture, establishing solutions using existing assets and new components, identifying barriers to adoption, preparing for governance issues, and effective deployment and management within an organization.

Thursday, August 2, 2007

Web Services performance through the years

On my travels I get asked one simple question many times: "are web services fast?"

I usually answer by showing the above graphic. Back when I started on Web Services in 2002, the answer was no. As you can see, over 5 years the answer has definitely changed to "it depends".

I say "it depends" with a little sarcasm. Obviously the performance shown in this chart tells you web services perform. However, in practice I find misuses of performance data and/or web services.

The first thing to be wary of is people who try to prove web services aren't viable by comparing "Hello World" string in/string out web services to legacy communication protocol xyz. I usually ask such folks how many distributed services in their architecture are string in/string out with such small payloads. The answer is none. Usually the service uses complex industry standard schemas and has a small payload in with a large payload out. The above graph is therefore based on a 3k input document using banking industry standard schemas with a 10k output document.

The second thing to be wary of is primitive web services testing. The above graph is an example of primitive web services testing, because my team works on optimizing web services. It shows very clearly the impact of web services calling services that have zero business logic. If we optimize the performance of this primitive, we help every scenario that uses web services. However, customers need to understand that when you add business logic, the performance impact of web services is far less of a concern. To accurately gauge the performance impact of web services in your application, I would suggest measuring a vertical slice of your entire application, starting at the web service interface, then calling business logic, and finally any database logic. Comparing that vertical slice between web services and legacy communication protocol xyz is a useful comparison.
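A minimal harness for that vertical-slice comparison might look like the sketch below. It assumes two hypothetical entry points that reach the same business logic and database access, one through the web service interface and one through the legacy protocol; here they are plain Supplier stand-ins, and in a real test each would be a client call against the deployed application. The harness warms up first so JIT compilation does not skew the numbers, then reports the median end-to-end latency. The class and method names are illustrative, not part of any WebSphere tooling.

```java
import java.util.Arrays;
import java.util.Objects;
import java.util.function.Supplier;

/** Illustrative harness: median end-to-end latency of one vertical slice. */
final class VerticalSliceTimer {
    // Consumes each result so the JIT cannot treat the measured call as dead code.
    private static volatile int sink;

    /** Median latency in nanoseconds of one end-to-end call, measured after a warm-up phase. */
    static long medianNanos(Supplier<?> endToEndCall, int warmup, int samples) {
        if (samples <= 0) {
            throw new IllegalArgumentException("samples must be positive");
        }
        for (int i = 0; i < warmup; i++) {
            sink ^= Objects.hashCode(endToEndCall.get());   // let the JIT compile the hot path first
        }
        long[] times = new long[samples];
        for (int i = 0; i < samples; i++) {
            long start = System.nanoTime();
            sink ^= Objects.hashCode(endToEndCall.get());
            times[i] = System.nanoTime() - start;
        }
        Arrays.sort(times);
        return times[samples / 2];   // median (upper median for an even sample count)
    }

    public static void main(String[] args) {
        // Hypothetical stand-ins: replace each with a client call that enters through its
        // interface (web service vs. legacy protocol) and runs the same business logic and
        // database access.
        Supplier<String> viaWebService = () -> "web service response";
        Supplier<String> viaLegacyProtocol = () -> "legacy response";
        System.out.println("web service median ns: " + medianNanos(viaWebService, 1_000, 10_000));
        System.out.println("legacy median ns: " + medianNanos(viaLegacyProtocol, 1_000, 10_000));
    }
}
```

Measuring the whole slice, rather than the protocol alone, is what keeps the web service overhead in proportion to the business logic and database work around it.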

Lastly, don't replace every legacy communication protocol xyz in every application with web services. Almost every vendor has documented that web services are useful when the service calls are coarse grained. Replacing "services" with calls that have under 1k of input and 1k of output (for example, a string in and a simple bean out) isn't usually a good idea. Reusable services in a SOA, for example, typically carry much larger payloads, as they tend not to be fine grained in payload or function.
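The difference between fine-grained and coarse-grained contracts shows up as round trips: every remote call pays a fixed cost (request/response over the network, envelope construction, XML parsing) regardless of how small its payload is. The sketch below contrasts a hypothetical per-quote contract with a hypothetical batch contract and counts the calls needed to price a small portfolio. The interface names, symbols, and prices are made up for illustration; the in-memory class only simulates remote calls.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical fine-grained contract: one remote call per quote. */
interface FineGrainedQuotes {
    double getPrice(String symbol);
}

/** Hypothetical coarse-grained contract: one remote call returns every requested quote. */
interface CoarseGrainedQuotes {
    Map<String, Double> getPrices(List<String> symbols);
}

/** In-memory stand-in that counts how many "remote" calls a client makes. */
final class CountingQuoteService implements FineGrainedQuotes, CoarseGrainedQuotes {
    private final Map<String, Double> prices;
    int remoteCalls = 0;   // each increment stands for one request/response round trip

    CountingQuoteService(Map<String, Double> prices) {
        this.prices = prices;
    }

    @Override
    public double getPrice(String symbol) {
        remoteCalls++;
        return prices.getOrDefault(symbol, 0.0);   // unknown symbols price at 0 in this sketch
    }

    @Override
    public Map<String, Double> getPrices(List<String> symbols) {
        remoteCalls++;
        Map<String, Double> result = new LinkedHashMap<>();
        for (String symbol : symbols) {
            result.put(symbol, prices.getOrDefault(symbol, 0.0));
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, Double> prices = new HashMap<>();   // made-up symbols and prices
        prices.put("AAA", 100.0);
        prices.put("BBB", 30.0);
        prices.put("CCC", 20.0);
        List<String> portfolio = Arrays.asList("AAA", "BBB", "CCC");

        CountingQuoteService fine = new CountingQuoteService(prices);
        for (String symbol : portfolio) {
            fine.getPrice(symbol);             // one round trip per holding
        }
        CountingQuoteService coarse = new CountingQuoteService(prices);
        coarse.getPrices(portfolio);           // one round trip for the whole portfolio

        System.out.println("fine-grained calls: " + fine.remoteCalls
                + ", coarse-grained calls: " + coarse.remoteCalls);   // 3 vs 1
    }
}
```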

Now, you may be asking two questions ...

1) How did we improve the performance over the years?

2) What is the performance story for 2007 and the new Web Services Feature Pack?

I think those are two good posts to add in the next week or two ...

Friday, July 27, 2007

TCs created at OASIS for SCA

This is the result of a lot of effort from my colleagues at IBM as well as contributors from the many vendors that comprise the OSOA organization. I look forward to a standards process enriching and refining this technology into the valuable, open, multilingual SOA architecture we envisioned several years ago. Great progress! It is also evidence that while this technology is not rolling out as fast as some of us would like, the value proposition and promise remain strong.

Tuesday, July 24, 2007

Obligatory First Post from Andrew Spyker

I have been with IBM WebSphere Application Server for the past five years as a lead of our performance team. My time in performance has let me focus on many things - J2EE, Java, Security, Web Services, and more recently all things relating to our SOA foundation. Earlier this year I was promoted to STSM (Senior Technical Staff Member) and asked to broaden this focus more formally as a SOA Runtime Architect. This means I get to focus on more than performance (which I have traditionally done, since performance allows you to experience all aspects of the product end-to-end) and more than WAS (i.e., the rest of the SOA foundation). My focus lately is how we can drive our XML strategy forward in both function and performance, given that XML is one of the core building blocks of all of our SOA runtimes. Also, I still advise the team I've built in WAS performance on a daily basis. More on all of that later.

So in the end you should see a mix of content on XML, SOA, and performance from me.

Friday, July 20, 2007

WS-AT interop between WAS and WCF

Thursday, July 19, 2007

Coping with the internet crash

Breaking News: All Online Data Lost After Internet Crash

We kept telling folks to migrate the internet from those two 386 boxes in the basement in Champaign-Urbana to z/OS on the mainframe, but did they listen? No.

Tuesday, July 17, 2007

Slightly off-topic: IBM visionary retires

Article about Irving

Interview with Irving

Tuesday, July 10, 2007

WAS V6.1 - Web Services Feature Pack is available

EJB3 Beta program

I've mentioned the Web Services feature pack below. As you'd expect, the EJB3 feature pack follows the same idea - an optionally installable add-on that provides support for EJB3 applications.

Project Zero site is live

Monday, July 2, 2007

Progressive Leakiness

I wonder whether, when building a good abstraction, you should just acknowledge that all abstractions will be leaky and design-in the leakiness. In this pursuit, we should use the laws of Progressive Disclosure, whereby the user of an interface or software is exposed only to those concepts that are minimally required to accomplish the desired task. I strongly believe in the usefulness of progressive disclosure techniques, as they tend to keep simple stuff simple and can make learning a new thing easier.

One of the painful things about abstractions is that they sometimes get in your way; a good abstraction can step aside in situations like this. The best example I can think of where leaky abstractions and progressive disclosure meld well is computer language compilers: some of the better ones let the programmer "drop down" into assembler right in the middle of higher-level language constructs, and tailor the code specifically for a given hardware device. This drop-down capability lets the programmer cope, in a very natural way, with leaks such as new instructions that the higher-level language (i.e. the abstraction) was not built to exploit, or even specific condition codes that can arise on newer hardware and must be handled for correct code behavior. This concept demonstrates good "Progressive Leakiness": most programmers get the full value of the language abstraction without being exposed to the more complex assembler language techniques (progressive disclosure), yet specific situations can still be coded that the abstraction could not handle or that would cause the abstraction to misbehave (leaky abstraction).

It seems to me that SCA might be a good candidate for a Progressive Leakiness focus. I could envision casting an SCA Reference to a JAX-WS, for instance, to do specific Web Service stuff that would not otherwise be available, or perhaps even appropriate, through the SCA abstraction.

I don't know, maybe I'm wrong; I could be in need of a vacation too. I can't tell....
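One way to design-in that leakiness is an explicit, opt-in escape hatch on the abstraction, similar in spirit to JDBC 4.0's java.sql.Wrapper (isWrapperFor/unwrap). The sketch below is hypothetical throughout: ServiceReference, SoapBindingDetails, SoapServiceReference, and the unwrap method are invented names, not part of the SCA specification or any WebSphere API. Callers that only need invoke() never see the binding (progressive disclosure); the rare caller that needs binding-specific control asks for it explicitly and must handle the case where it is not available.

```java
import java.util.Optional;

/** Hypothetical binding-neutral abstraction: most callers only ever use invoke(). */
interface ServiceReference {
    String invoke(String operation, String payload);

    /** Escape hatch: expose a binding-specific view if this reference has one. */
    <T> Optional<T> unwrap(Class<T> type);
}

/** Hypothetical binding-specific details a web service caller might need. */
final class SoapBindingDetails {
    final String endpointAddress;

    SoapBindingDetails(String endpointAddress) {
        this.endpointAddress = endpointAddress;
    }
}

/** A reference backed by a simulated SOAP binding. */
final class SoapServiceReference implements ServiceReference {
    private final SoapBindingDetails details;

    SoapServiceReference(String endpointAddress) {
        this.details = new SoapBindingDetails(endpointAddress);
    }

    @Override
    public String invoke(String operation, String payload) {
        return operation + " handled at " + details.endpointAddress;   // stand-in for a real call
    }

    @Override
    public <T> Optional<T> unwrap(Class<T> type) {
        // Only leak what was asked for, and only if this binding actually has it.
        return type.isInstance(details) ? Optional.of(type.cast(details)) : Optional.empty();
    }
}

final class ProgressiveLeakinessDemo {
    public static void main(String[] args) {
        ServiceReference ref = new SoapServiceReference("http://localhost:9080/QuoteService");
        // Simple path: the abstraction is all most callers ever see.
        System.out.println(ref.invoke("getQuote", "<symbol>AAA</symbol>"));
        // Leaky path: a caller that needs binding details asks for them explicitly.
        ref.unwrap(SoapBindingDetails.class)
           .ifPresent(d -> System.out.println("endpoint: " + d.endpointAddress));
    }
}
```

Returning an empty Optional rather than throwing keeps the leak optional: code written against a non-SOAP binding still works, it just takes the simple path.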

Monday, June 25, 2007

Web 2.0 at WebSphere Portal Technical Conference 2007

Apart from the new Web 2.0 technology added to WebSphere Portal, Lotus has Web 2.0 applications under development for collaboration and social networking: Lotus Quickr and Lotus Connections, which can both be integrated with WebSphere Portal. This allows customers to use the portal as a central entry point that provides the right content, information, processes and applications to the right people at the right time, and that links to and integrates with the collaboration and social networking capabilities provided by Lotus Quickr and Lotus Connections.

These new Web 2.0 capabilities will be featured in presentations at the WebSphere Portal Technical Conference 2007 in Munich, from September 10th-12th.

The event focuses on WebSphere Portal based solutions and is organized to provide IBM customers, business partners and colleagues with latest technical and strategy directions for IBM WebSphere® Portal Version 6.0.x and Lotus technologies, including extended support for Web 2.0 services, performance enhancements and new IBM Accelerator solutions that help customers to easily create a variety of SOA based composite applications, enabling faster deployment and user productivity.

Wednesday, June 20, 2007

Using Spring with WAS and WAS ND

One of the results of this collaborative effort is an update to the paper on developerWorks that describes some of the best practices for using Spring with WAS. For example, this illustrates how Spring's declarative transaction demarcation is configured for WAS and how data sources should be declared in a Spring application context definition for injection into a transactional application.

Tuesday, June 19, 2007

A quick glance over at Rational

Honestly, I thought the questions left a lot to be desired, as if the interviewer had not done their homework before talking to Nackman (like "When will Jazz be done?", which is like asking "When will Eclipse be done?"). In spite of the questions, there are some interesting tidbits in there. For example:

Question: You said Jazz is like a middleware layer for building collaborative tools. Can you expand on that?

Answer: The way that I like to think of it is that the Jazz platform provides a middleware layer for software development and delivery. And so the way that that's related to the ESB idea is things happen and notifications need to happen. So somebody might create some sort of a work item, and somebody might be subscribed to a feed for those kinds of work items. And how does that get transported? The nice thing for us that there is so much good collaboration technology, things like RSS feeds, that exist now that we can just exploit.

Sounds like an interesting project.

Friday, June 15, 2007

Simple Ajax

AJAX is not a framework or library; it is an application pattern (although many frameworks are emerging to help, such as Dojo). An Ajax-enabled page uses JavaScript to send an XML request back to the server; the server completes the request asynchronously and sends back results, and then more JavaScript in the page updates some parts of the page (rather than refreshing the entire page).

The problem is that developing Ajax-based applications is hard: it requires writing complex JavaScript and DHTML, and the asynchronous nature of the pattern further complicates debugging. There are also a series of other problems that have solutions but are easier to get wrong than to get right: making the browser's 'back' button work is tough, JavaScript has a tendency to leak memory, you must test your code on many browser variants, etc. As noted, frameworks that help are starting to emerge, most notably Dojo, but they are still immature and still require that you write JavaScript code in your page.

WebSphere and RAD have supported JavaServer Faces (JSF) based applications for several releases now, and JSF together with the JWL component library we ship greatly simplifies application development. What's new is that RAD v7 has introduced some common Ajax behaviors into our JSF component library: you can now provide capabilities such as scrolling data tables with no page refresh, dynamic menus, and entry fields that support type-ahead. The nice part is that none of this requires you (the developer) to write any JavaScript; the bulk of the function is encapsulated in the JWL component library, and the tiny snippets that are required can be generated for you by RAD. In turn, this means you avoid most of the problems listed above while still providing the enhanced interaction that people are starting to expect from modern web pages.
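RAD generates the page-side script for type-ahead, but something on the server still has to answer each request with a short list of matches. A minimal sketch of that server-side step in plain Java, assuming nothing about the JSF or servlet APIs: the class `TypeAheadSuggester`, the case-insensitive prefix match, the result limit and the `<suggestions>` XML shape are all hypothetical, not what JWL actually exchanges with the browser.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

// Hypothetical server-side helper for an Ajax type-ahead field; not part of JWL or JSF.
class TypeAheadSuggester {
    private final List<String> candidates;
    private final int maxResults;

    TypeAheadSuggester(List<String> candidates, int maxResults) {
        this.candidates = new ArrayList<>(candidates);
        this.maxResults = maxResults;
    }

    // Returns up to maxResults candidates that start with the typed prefix, ignoring case.
    List<String> suggest(String prefix) {
        String p = prefix.toLowerCase(Locale.ROOT);
        List<String> matches = new ArrayList<>();
        for (String c : candidates) {
            if (matches.size() == maxResults) {
                break;
            }
            if (c.toLowerCase(Locale.ROOT).startsWith(p)) {
                matches.add(c);
            }
        }
        return matches;
    }

    // Wraps the matches in a small XML fragment; script in the page would use it to
    // redraw only the suggestion list instead of refreshing the whole page.
    String suggestAsXml(String prefix) {
        StringBuilder xml = new StringBuilder("<suggestions>");
        for (String m : suggest(prefix)) {
            xml.append("<item>").append(escape(m)).append("</item>");
        }
        return xml.append("</suggestions>").toString();
    }

    // Minimal escaping so candidate text cannot break the XML.
    private static String escape(String s) {
        return s.replace("&", "&amp;").replace("<", "&lt;").replace(">", "&gt;");
    }

    public static void main(String[] args) {
        TypeAheadSuggester s = new TypeAheadSuggester(
                List.of("Raleigh", "Rochester", "Research Triangle Park", "Austin"), 2);
        System.out.println(s.suggestAsXml("r"));
        // prints <suggestions><item>Raleigh</item><item>Rochester</item></suggestions>
    }
}
```

Capping the result list keeps each keystroke's response small, which matters more for perceived responsiveness than returning every possible match.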

JSF-based pages can coexist quite happily with "normal" JSP and HTML pages, so there is no requirement for you to make a huge investment and redo your entire site. Instead, you can update just the pages that you wish to modernize, and provide the basic interaction patterns that are the hallmark of "Web 2.0". There are several resources available to help you understand the new capabilities, and a couple of articles on developerWorks provide a good starting point. The first, "JSF and Ajax: Web 2.0 application made easy", provides a general overview, and the second, "Improve the usability of Web applications with type-ahead input fields", walks you through adding type-ahead to a JSF-based page.

As Ajax continues to mature, frameworks such as Dojo will continue to add value to the development of advanced web pages - but the capability that's available in RAD v7 and WAS 6.1 today is more than enough to allow you to provide your customers with a modern, interactive site, without forcing your developers to go through a painful development process.

Tim Francis

Thursday, June 14, 2007

Multi-Cores, SOA, SCA and the Incredible Lightness of Being

Whoa. Where to start? The article made me ask: does SOA, or SCA for that matter, really help applications deal with multi-core processors? Far be it from me to quibble over semantics, but "multi-core" implies a single box, while the focus of SOA and SCA is the wider, distributed network, which may well be made up of multi-core nodes. Certainly SOA helps application developers arrive at application patterns and best practices that lend themselves to exploiting multi-core processors, or, even more importantly, a set of distributed processes and processors. Simply using a container-based programming model like JEE, or even Spring, puts most of the burden of thread management in the hands of the middleware, where presumably the appropriate controls and exploitation of multi-core processors have been meticulously built; that really doesn't have much to do with SOA or SCA. Billy Newport has also blogged numerous times about applications and multi-core issues. While there has been lots of research in predictive instruction execution and pipelining, SCA is more like a circuit board assembly than a flow chart (as BPEL perhaps is), and its model doesn't really imply any ordering or sequencing that would afford any great advance in multi-tasking. SCA does have asynchronous invocation concepts, which allow a greater opportunity for multi-tasking, but I would hardly say they are transparent to the application.
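To illustrate the point about asynchronous invocation: once a call is asynchronous, the caller's code changes shape. The sketch below uses plain `java.util.concurrent` rather than any SCA API, and the `QuoteService` interface and its stand-in pricing are hypothetical. The synchronous caller simply gets a value back; the asynchronous caller lets two calls run in parallel (possibly on different cores) but must now handle futures, combine results, and decide when to block.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

class AsyncInvocationSketch {
    // Hypothetical service contract; in SCA this would be a component's service interface.
    interface QuoteService {
        double quote(String symbol);
    }

    // Stand-in implementation so the sketch runs on its own.
    static final QuoteService SERVICE = symbol -> symbol.length() * 10.0;

    // Synchronous style: the caller sees plain return values, one call after the other.
    static double totalSync(String a, String b) {
        return SERVICE.quote(a) + SERVICE.quote(b);
    }

    // Asynchronous style: both calls may run concurrently on the pool's threads,
    // but the caller must now manage futures, combine them, and choose when to wait.
    static double totalAsync(String a, String b, ExecutorService pool) {
        CompletableFuture<Double> fa = CompletableFuture.supplyAsync(() -> SERVICE.quote(a), pool);
        CompletableFuture<Double> fb = CompletableFuture.supplyAsync(() -> SERVICE.quote(b), pool);
        // join() blocks, and surfaces any failure as a CompletionException.
        return fa.thenCombine(fb, Double::sum).join();
    }

    public static void main(String[] args) {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        try {
            System.out.println(totalSync("IBM", "BEA"));        // prints 60.0
            System.out.println(totalAsync("IBM", "BEA", pool)); // prints 60.0
        } finally {
            pool.shutdown();
        }
    }
}
```

Both methods compute the same answer; what differs is how much concurrency plumbing the calling code has to own.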

To cut Andy a break, perhaps what he intends to say is that the SOA and SCA programming style of declaring dependencies and policies allows the runtimes to better understand the application, and therefore gives them a better chance of exploiting the available hardware to solve the problem.

The article then diverges into other topic areas for which I also have some comments.

- Java is certainly an important language for SCA to embrace and to treat in a first-class manner, but it is hardly the only one. BEA and IBM, at least, are keenly interested in SCA assemblies with BPEL implementations, and there is lots of activity in other languages. Of course WebSphere is going to focus on Java -- it is a Java runtime. What will be interesting is how vendors choose to slice and dice the types of component implementations they support, and in what form factor they deliver that support.

- I didn't quite understand his comments about requiring a higher-level intermediary to embrace WCF components. Certainly WCF components can consume or be exposed as Web Services, using WSDL and XML for interface definitions -- SCA embraces both service consumers and providers. Perhaps I'm missing some other point Andy is trying to make. Certainly, as I've blogged about before, SCA embraces existing (legacy) technology and I don't understand why anything would have to be rewritten. This seems just wrong to me.

- I don't agree with the statement that the vendors believe SCA is needed to achieve "tru(e) platform independence." What SCA allows you to do is compose and assemble applications written in a variety of implementation languages and hosted on a variety of platforms, while insulating the business logic developer from needing unnecessary knowledge of those implementations.

- Microsoft is not interested in application portability, so certainly some aspects of SCA are not interesting to them; however, the author seems to be unaware that SCA, albeit in its original proprietary form, has been shipping in WebSphere Process Server 6.0 for some time now.

- Service Data Objects (SDO) is not an optional subset of SCA. SDO is orthogonal to SCA, though complementary to it... to be honest, I just don't know what Andy was trying to articulate in his closing paragraph about SDO.

Monday, June 11, 2007

Integration of Google Gadgets in WebSphere Portal

The IBM Portlet for Google Gadgets is available for WebSphere Portal customers to download from here: http://catalog.lotus.com/portal?NavCode=1WP1001AJ

It allows portal administrators or users to easily select which Google Gadgets they want to integrate in portal pages using an AJAX-based user interface giving access to Google's gadget catalog. The selected gadget is then displayed on the page exactly like any local portlet and can be customized by end users like any local portlet, i.e. for end users, the integration of Google Gadgets in WebSphere Portal is seamless.

More information on this topic can be found here.

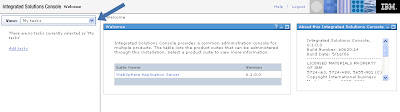

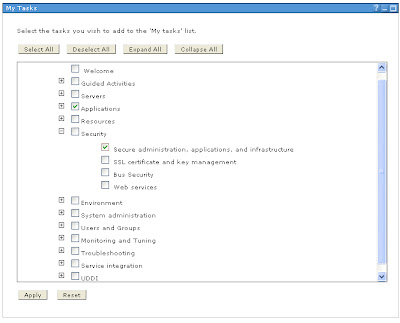

"My Tasks" in WAS V6.1

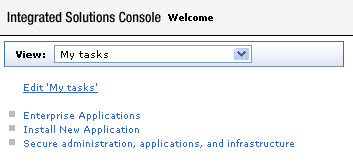

Assuming you've never been here before, it prompts you to add your first task. Clicking that option gives you a list of navigation options. You can choose either an entire category (like "Applications") or a sub-category (like "Secure administration, applications, and infrastructure"):

After clicking Apply, voila! You now have a customized navigation that only shows what you care about.

Thursday, June 7, 2007

It's a Dessert Topping AND a Floor Wax

Of course SCA defines a new programming model; however, it also specifically defines an invocation model, a component model, and an SOA assembly model that embraces other, non-SCA programming models. Add to the confusion the fact that the term "programming model" has specific language connotations (at least in my mind), while SCA and its cousin SDO were specifically designed to be language neutral.

What we need for building SOA applications is a contract that describes dependencies, policies and implementation with as little knowledge of, or impact on, existing business logic as possible. It needs to lift us out of the computer science goo and into building business logic that is meaningful to the business.

Whether one can use SCA with or without another container, or as a client without any container at all, is less important in my mind than its design points: separation of concerns, and insulating the business logic from the specific protocol and data bindings that lead to fragile, inflexible applications.
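A minimal sketch of that separation of concerns in plain Java, with hypothetical names throughout (`CreditCheck`, `OrderProcessor`, and a made-up semicolon-delimited message format): the business logic depends only on an interface, and whoever assembles the application decides whether that interface is bound to an in-process implementation or to a message-based transport. This is not the SCA API, just the design point it is built around.

```java
import java.util.function.Function;

class SeparationOfConcernsSketch {
    // The business contract: the only thing the business logic is allowed to know about.
    interface CreditCheck {
        boolean approve(String customerId, double amount);
    }

    // Business logic: no protocol, transport, or data-format details in here.
    static final class OrderProcessor {
        private final CreditCheck creditCheck;

        OrderProcessor(CreditCheck creditCheck) {
            this.creditCheck = creditCheck;
        }

        String placeOrder(String customerId, double amount) {
            return creditCheck.approve(customerId, amount) ? "ACCEPTED" : "REJECTED";
        }
    }

    // "Binding" 1: a direct, in-process implementation.
    static CreditCheck localBinding(double limit) {
        return (customer, amount) -> amount <= limit;
    }

    // "Binding" 2: marshals the call into a text message, hands it to a transport
    // (simulated here by a function), and unmarshals the reply.
    static CreditCheck messageBinding(Function<String, String> transport) {
        return (customer, amount) -> {
            String reply = transport.apply("approve;" + customer + ";" + amount);
            return "yes".equals(reply);
        };
    }

    public static void main(String[] args) {
        // Simulated remote provider with a lower credit limit.
        Function<String, String> fakeRemote =
                msg -> Double.parseDouble(msg.split(";")[2]) <= 500 ? "yes" : "no";
        // The assembler, not the business logic, decides which binding is wired in.
        System.out.println(new OrderProcessor(localBinding(1000)).placeOrder("c1", 750));         // ACCEPTED
        System.out.println(new OrderProcessor(messageBinding(fakeRemote)).placeOrder("c1", 750)); // REJECTED
    }
}
```

`OrderProcessor` is identical in both runs; only the wiring changes, which is what keeps the business logic from becoming fragile when a binding does.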

Steve Kinder

Tuesday, June 5, 2007

IBM WSRP Producer for IBM WebSphere Application Server

The IBM WSRP Producer for WAS is a lightweight WSRP producer that can expose JSR 168 portlets deployed on IBM WebSphere Application Server Version 6.1 as WSRP services. These services can be integrated into any spec-conformant WSRP Consumer, such as IBM WebSphere Portal Version 6 or IBM WebSphere Portal Version 5.1, as well as portal products from other vendors. The WSRP Producer for IBM WebSphere Application Server is stateless (with the exception of session data) and pushes portlet customization to the WSRP Consumer (client).

The code can be downloaded from http://catalog.lotus.com/portal?NavCode=1WP1001BA

More information on WSRP can be found at http://www.oasis-open.org/committees/wsrp/

Monday, June 4, 2007

Just for Terry

Certainly, we WebSpherians can learn a few lessons. Einstein is credited with saying, "Everything should be made as simple as possible, but not simpler."

Steve Kinder

Friday, June 1, 2007

It really is Christmas Early... or is that Holiday Season?

If you haven't heard of SCA and SDO, where have you been? :-) We are very interested in getting feedback on what we are doing right, but especially what we are doing wrong with these new SOA related programming and assembly model concepts.

Happy Coding...

Steve Kinder

p.s. I forgot to mention that installing the SOA Feature Pack also installs the Web Services Feature Pack, since we base our Web services support on the new Apache Axis2 runtime. If you download the SOA Feature Pack, you get both feature packs.

Thursday, May 31, 2007

The new drop of the Web Services feature pack beta is available

The beta, like the eventual GA version of the feature pack, is an optionally installable package. If you need the latest web services standards, you can add them onto WebSphere Application Server V6.1. If you don't, you can ignore it.

The latest beta is basically a fully functional version of the feature pack that is going through additional testing now. The feature pack has support for:

- Web Services Reliable Messaging (WS-RM)

- Web Services Addressing (WS-Addressing)

- SOAP Message Transmission Optimization Mechanism (MTOM)

- Web Services Secure Conversations (WS-SC)

- Java API for XML Web Services (JAX-WS 2.0)

- Java Architecture for XML Binding (JAXB 2.0)

- SOAP with Attachments API for Java (SAAJ 1.3)

- Streaming API for XML (StAX 1.0)
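Of the APIs listed above, StAX is the easiest to try outside the feature pack, because `javax.xml.stream` has shipped in the JDK since Java 6. It is a pull parser: the application asks for the next event instead of receiving callbacks or building a whole tree. A minimal sketch, where the SOAP-like envelope and the `symbol` element are made up for illustration:

```java
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import javax.xml.stream.XMLInputFactory;
import javax.xml.stream.XMLStreamConstants;
import javax.xml.stream.XMLStreamException;
import javax.xml.stream.XMLStreamReader;

class StaxSketch {
    // Pulls the text of every <symbol> element, one parse event at a time,
    // without building an in-memory tree of the whole document.
    static List<String> symbols(String xml) {
        List<String> result = new ArrayList<>();
        try {
            XMLStreamReader reader =
                    XMLInputFactory.newInstance().createXMLStreamReader(new StringReader(xml));
            try {
                while (reader.hasNext()) {
                    int event = reader.next(); // the application "pulls" the next event
                    if (event == XMLStreamConstants.START_ELEMENT
                            && "symbol".equals(reader.getLocalName())) {
                        result.add(reader.getElementText()); // reads up to the matching end tag
                    }
                }
            } finally {
                reader.close();
            }
        } catch (XMLStreamException e) {
            throw new IllegalArgumentException("Malformed XML", e);
        }
        return result;
    }

    public static void main(String[] args) {
        String xml = "<Envelope><Body><getQuotes>"
                + "<symbol>IBM</symbol><symbol>MSFT</symbol>"
                + "</getQuotes></Body></Envelope>";
        System.out.println(symbols(xml)); // prints [IBM, MSFT]
    }
}
```

Because the parser only advances when asked, the loop can stop early or skip whole subtrees, which is why streaming parsers suit large SOAP payloads.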

Wednesday, May 30, 2007

My surreal work experience for the day

So here's what Bob Muglia, Microsoft's senior vice president for the Server and Tools division, had to say:

"[Our customer council] basically told me, pretty directly, that while Microsoft's implementation was in great shape, IBM's and others were not, and that Microsoft needed to do a better job helping them do a better implementation," Muglia said. "And I had to think about that, as it is one thing for us to work with customers around interoperability, but quite another to go out and help a competitor build a better product to enable interoperability."

But Microsoft has now decided to go and talk to IBM and BEA Systems and a few others to help improve and define their interoperability. "Ultimately these guys have to make their products good, but there is a lot we can do working with them to make their products interoperate better with us," Muglia said.

IBM could not be immediately reached for comment on Muglia's remarks or its thoughts about improving interoperability with Microsoft.

After I picked myself off the floor, I asked myself, "Why would eWeek allow itself to be used like this?" Clearly, this was simply a marketing move, not a signal of genuine intention to work with IBM. If Microsoft really wanted to work with IBM on interoperability, would it launch this activity by telling eWeek that the goal was for Microsoft to help IBM with our implementation? And without a response from IBM? This is not a news story, it's an advertisement.

SO-UH... IT SOA Part 4. No, really separation of concerns.

To really achieve separation of concerns between the service consumer and the service provider, it is often beneficial to introduce service virtualization. Service virtualization means that we don't publish the concrete service provider to consumers; instead, we publish an intermediary that exposes the formal service interface and business contract. In its simplest form, the intermediary is just a pass-through that passes parameters and context to the real, concrete service provider implementation. In more complicated forms, dynamic decisions can be added to the intermediary to choose the most appropriate service provider implementation. A very simple business example is the famous "getStockQuote" service. Some stock quotes are delayed 20 minutes but are free to obtain, whereas real-time quotes usually cost the consumer a small fee. Both provide the same service; one costs the business more but offers a better quality of service. This intermediary is sometimes called a mediation.
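The stock quote example can be sketched as a mediation in plain Java. This assumes hypothetical class names, fixed stand-in prices, and a simple per-consumer entitlement set as the routing rule; a real ESB mediation would be configured in the bus rather than hand-coded like this. The point is that consumers only ever hold a `StockQuoteService`, so the choice between the free delayed provider and the fee-based real-time provider stays inside the intermediary.

```java
import java.util.Set;

class StockQuoteMediation {
    // The published contract: consumers only ever see this interface.
    interface StockQuoteService {
        double getStockQuote(String symbol);
    }

    // Free provider: quotes delayed about 20 minutes. Fixed price for illustration.
    static final class DelayedQuoteProvider implements StockQuoteService {
        public double getStockQuote(String symbol) {
            return 100.0;
        }
    }

    // Fee-based provider: real-time quotes; counts billable calls.
    static final class RealTimeQuoteProvider implements StockQuoteService {
        int billableCalls = 0;

        public double getStockQuote(String symbol) {
            billableCalls++;
            return 101.5;
        }
    }

    // The intermediary that gets published instead of either concrete provider.
    static final class QuoteMediation {
        private final StockQuoteService delayed;
        private final StockQuoteService realTime;
        private final Set<String> premiumConsumers; // hypothetical entitlement data

        QuoteMediation(StockQuoteService delayed, StockQuoteService realTime,
                       Set<String> premiumConsumers) {
            this.delayed = delayed;
            this.realTime = realTime;
            this.premiumConsumers = premiumConsumers;
        }

        // Each consumer gets an endpoint with the same interface; the routing decision
        // stays inside the mediation, so providers can change without touching consumers.
        StockQuoteService endpointFor(String consumerId) {
            boolean premium = premiumConsumers.contains(consumerId);
            return symbol -> premium ? realTime.getStockQuote(symbol) : delayed.getStockQuote(symbol);
        }
    }

    public static void main(String[] args) {
        RealTimeQuoteProvider realTime = new RealTimeQuoteProvider();
        QuoteMediation mediation =
                new QuoteMediation(new DelayedQuoteProvider(), realTime, Set.of("acme-trading"));
        System.out.println(mediation.endpointFor("acme-trading").getStockQuote("IBM")); // 101.5
        System.out.println(mediation.endpointFor("casual-user").getStockQuote("IBM"));  // 100.0
        System.out.println(realTime.billableCalls);                                      // 1
    }
}
```

Dropping the routing rule and always delegating to one provider gives the simple pass-through form described above.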

Service virtualization is one of several beneficial aspects of Enterprise Service Bus patterns.

Bottom line: separation of concerns is a good thing for building flexible, agile applications. Best practices and newer programming constructs can achieve a good deal of separation of concerns. Service virtualization complements these concepts and extends separation of concerns to older programming styles, patterns, and languages. Service virtualization makes it easier to embrace legacy applications, using them where they are hosted.

Steve Kinder

Tuesday, May 29, 2007

Web Service Transaction support in WAS

WAS has provided support for WS-AT 1.0 and WS-BA 1.0 (the input specifications to the OASIS WS-Transaction Technical Committee) since 6.0 and 6.1 respectively.

WS-AT support in WAS (since 6.0)

WS-AT is suited to short-running, tightly-coupled services due to its 2PC nature and the consequence that participants/resources are held in-doubt during the second phase of 2PC. It is used to distribute an atomic transaction context between multiple application components such that any resources (e.g. databases, JMS providers, JCA resource adapters) used by those components are coordinated by WAS (using XA) to an atomic outcome. It is typically used between components deployed within a single enterprise where there are two primary scenarios requiring WS-AT:

- A SOA deployment requiring atomic transactional outcomes between two or more service components.

- Transaction federation between heterogeneous runtime environments. WAS WS-AT interoperability has been tested with CICS and with Microsoft .NET 3.0 framework in Windows Vista.
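In WAS the XA coordination happens for you, so applications write no code for it. Purely to show why 2PC suits short-running, tightly-coupled work, here is a minimal in-memory sketch of the coordinator's decision. The `Participant` interface and class names are hypothetical, not the JTA, XA or WS-AT APIs, and the sketch omits the durable logging and recovery that a real transaction manager needs for in-doubt participants.

```java
import java.util.ArrayList;
import java.util.List;

class TwoPhaseCommitSketch {
    // Hypothetical participant, loosely modeled on an XA resource (not the XA or WS-AT API).
    interface Participant {
        boolean prepare(); // true = "prepared" (in doubt until told the outcome), false = abort
        void commit();
        void rollback();
    }

    enum Outcome { COMMITTED, ROLLED_BACK }

    // Drives an atomic outcome across all participants. A real coordinator would also log
    // its decision durably before phase 2 so in-doubt participants can be recovered.
    static Outcome complete(List<Participant> participants) {
        // Phase 1: collect votes. Prepared participants hold their locks from here on.
        for (Participant voter : participants) {
            if (!voter.prepare()) {
                // One "no" vote aborts everything; the voter has already rolled itself back.
                for (Participant p : participants) {
                    if (p != voter) {
                        p.rollback();
                    }
                }
                return Outcome.ROLLED_BACK;
            }
        }
        // Phase 2: everyone voted yes, so every participant is told to commit.
        for (Participant p : participants) {
            p.commit();
        }
        return Outcome.COMMITTED;
    }

    // Test double that records the protocol messages it receives.
    static final class RecordingParticipant implements Participant {
        private final String name;
        private final boolean vote;
        private final List<String> log;

        RecordingParticipant(String name, boolean vote, List<String> log) {
            this.name = name;
            this.vote = vote;
            this.log = log;
        }

        public boolean prepare() { log.add(name + ":prepare"); return vote; }
        public void commit() { log.add(name + ":commit"); }
        public void rollback() { log.add(name + ":rollback"); }
    }

    public static void main(String[] args) {
        List<String> log = new ArrayList<>();
        List<Participant> ps = List.of(
                new RecordingParticipant("db", true, log),
                new RecordingParticipant("jms", false, log),
                new RecordingParticipant("jca", true, log));
        System.out.println(complete(ps) + " " + log);
        // prints ROLLED_BACK [db:prepare, jms:prepare, db:rollback, jca:rollback]
    }
}
```

The window between a participant's "yes" vote and the coordinator's phase-2 message is exactly the in-doubt period mentioned above, which is why 2PC is a poor fit for long-running interactions.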

WS-AT is to Web services as Object Transaction Service (OTS) is to remote EJBs, and in WAS neither requires any coding on the part of the application developer. WAS ensures that the transaction context of the requester component is established by the container of the target component regardless of whether the target is invoked as an EJB (using the EJB remote interface) or a Web service (using JAX-RPC). The transaction can be started at the requester using the standard UserTransaction interface or, in the case of an EJB, as a standard EJB container-managed transaction; WAS takes care of deciding whether to use OTS or WS-AT to propagate that transaction context on remote requests depending on the type of invocation - no Java coding is required. If the requester is a Web service client then the application assembler simply needs to indicate, when assembling the requester component in AST or RAD, that Web Services Atomic Transaction should be used on requests, as described in the task Configuring transactional deployment attributes in the InfoCenter.

Distributed transactions in WAS, whether distributed between remote EJBs using OTS or Web services using WS-AT, benefit from WAS transaction high-availability (HA) support. In a non-HA WAS configuration, transaction recovery following a server failure occurs when the WAS server is restarted. In a WAS HA configuration, transaction recovery may also occur when a failed server restarts but in-doubt transactions can also be peer-recovered by an active server (which is processing its own, separate, transactional workload) in the same core group. Configuring WAS for transactional high availability is described in the InfoCenter topic Transactional high availability and also in a dedicated paper with some additional background, Transactional high availability and deployment considerations in WebSphere Application Server V6.

There is further information on WS-AT in the topic Web Services Atomic Transaction support in WebSphere Application Server in the InfoCenter.

Additional features of the WS-AT support in WAS 6.1

A documented limitation of the WAS 6.0 WS-AT support is that the WAS WS-AT context cannot be propagated through a firewall. Actually, the context itself can be propagated through a firewall but the participant registration protocol flow that follows typically cannot. Since WS-AT is primarily used within a single enterprise this is not too onerous a restriction, but it clearly limits the topologies in which WS-AT can be used in WAS 6.0. This limitation is removed in WAS 6.1, where the WS-AT service can be configured with the hostname of the HTTP(S) proxy server to which protocol messages should be sent for routing to the WS-AT service. While any generic HTTP proxy can be used, there are additional capabilities built into the WebSphere Proxy Server (shipped as part of WAS ND) that recognize WS-AT context and WS-AT protocol messages to provide edge-of-domain support for WS-AT high availability and workload management, essentially extending these capabilities to non-WAS clients. The InfoCenter has more information on this in the topic Web Services transactions, firewalls and intermediary nodes.

WS-BA support in WAS (since 6.1)

WS-BA is appropriate for long-running and loosely-coupled services. Its compensation model means that resource manager locks are not held for the duration of the transaction and, as a consequence, intermediate results are exposed to other transactions. But WS-BA is not exclusively for use by long-running applications; short-duration, tightly-coupled applications can benefit from a compensating transaction model just as much. Scenarios where short-running applications may choose a compensating rather than an ACID model include:

- the use of resources which cannot be rolled back as part of a global (2PC) transaction. For example, an application that sends an email cannot roll this back after it has been sent - but it could send a follow-up email. Other examples of non-2PC resources are LocalTransaction/NoTransaction resource adapters (RAs) in J2EE.

- avoidance of in-doubt transactions in resource managers, where the application wishes for an atomic outcome but can tolerate intermediate state being available to other transactions.
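The email case above can be sketched with the generic compensating-transaction pattern: each non-transactional step is paired with an action that semantically undoes it, and if a later step fails the completed steps are compensated newest-first. This is a plain-Java illustration with hypothetical names, not the WAS BAScope API; note that no locks are held, so the first email is visible before the outcome is known.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

class CompensationSketch {
    // A unit of work that cannot be rolled back, paired with the action that undoes it semantically.
    static final class Step {
        final Runnable action;
        final Runnable compensation;

        Step(Runnable action, Runnable compensation) {
            this.action = action;
            this.compensation = compensation;
        }
    }

    // Runs the steps in order. Each step's effects are visible as soon as it runs. If a step
    // fails, the already-completed steps are compensated newest-first and the failure is rethrown.
    static void run(List<Step> steps) {
        Deque<Step> completed = new ArrayDeque<>();
        for (Step step : steps) {
            try {
                step.action.run();
            } catch (RuntimeException e) {
                while (!completed.isEmpty()) {
                    completed.pop().compensation.run();
                }
                throw e;
            }
            completed.push(step);
        }
    }

    public static void main(String[] args) {
        List<String> outbox = new ArrayList<>();
        Step sendEmail = new Step(
                () -> outbox.add("Your booking is confirmed"),
                () -> outbox.add("Sorry, your booking has been cancelled")); // follow-up email
        Step reserveSeat = new Step(
                () -> { throw new IllegalStateException("no seats left"); },
                () -> outbox.add("(never sent)"));
        try {
            run(List.of(sendEmail, reserveSeat));
        } catch (IllegalStateException e) {
            System.out.println("failed: " + e.getMessage());
        }
        System.out.println(outbox);
    }
}
```

The step that failed is not compensated, since its action never completed; only work that actually happened gets undone.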

WAS 6.1 introduces a construct called a BAScope which is essentially an attribute that can be added to any WAS core unit of work (UOW), for example a global transaction (e.g. started by the EJB container for an EJB using container-managed transactions (CMT) or by a bean using the JTA UserTransaction API). A BAScope associates a Business Activity with whatever WAS core UOW an application runs under, and has a context that is implicitly propagated on remote calls made by that application. If the remote calls are Web service calls then the BAScope context is propagated as a WS-BA CoordinationContext. If the remote calls are RMI/IIOP EJB calls then the BAScope is propagated in an IIOP ServiceContext encapsulation of a WS-BA CoordinationContext (which is actually a CORBA Activity Service context as mentioned in the footnote of this post).

Any deployed WAS component runs in a WAS core UOW scope - for example a global transaction or a local transaction containment (which is the WAS environment for what the EJB spec refers to as an "unspecified transaction context"). Any resources (JDBC, JMS, JCA RAs) used by the component are accessed in the context of that UOW. For example, an EJB with a transaction attribute of "RequiresNew" accesses resources in the scope of a global transaction. So here's what is new with BAScopes: any EJB deployed in WAS 6.1 or later may be assembled to have a BAScope associated with its core UOW, where the BAScope has the same lifecycle as the core UOW to which it is associated and completes in the same direction. An EJB that runs under a BAScope (regardless of the core UOW) may be configured (through assembly) with a compensation handler, which is a plain old Java object that implements the com.ibm.websphere.wsba.CompensationHandler interface methods close and compensate. The compensation handler logic needs to be provided as part of the application (and is assembled into the application EAR file) but it is the WAS BAScope infrastructure which ensures that the compensation handler gets driven appropriately when the BAScope ends, regardless of any process failures. The BAScope infrastructure also persists any compensation instance data provided by the EJB during forward processing for later use by the compensation handler.

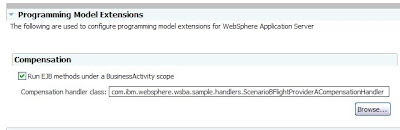

The figure below shows the AST pane for adding a BAScope compensation handler to an EJB

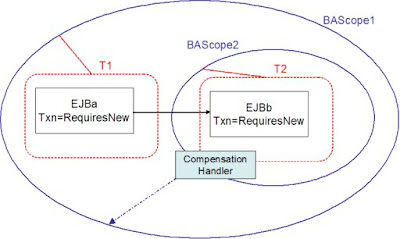

In the above example, the ScenarioBFlightProviderACompensationHandler application class implements close and compensate methods. If the EJB's transaction is rolled back, then the compensate method will be driven which is an opportunity to compensate any non-transactional work. If the EJB's transaction is committed then the WAS BAScope support either promotes the compensation handler to any parent BAScope or, if there is no parent, drives the close method (which might typically perform some simple clean-up or else just be a no-op). BAScope parent-child relationships are managed by WAS and can be used to compensate work previously committed as part of a global transaction. For example, if EJBa running under transaction T1 calls EJBb running under transaction T2 and both EJBs are configured to run under a BAScope, then the BAScope associated with T2 is a child of the BAScope associated with T1 as illustrated in the diagram below.

EJBb can define a compensation handler class and register some data relevant to transaction T2. If T2 commits, then that compensation handler (and compensation data) is promoted to BAScope2. If EJBa then hits an exception so that its transaction T1 is rolled back, the compensation handler is called to compensate for the work previously committed in T2.
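The parent-child behavior above can be modeled in a few lines of plain Java. This is an in-memory sketch under stated assumptions: `Handler` is a hypothetical stand-in for `com.ibm.websphere.wsba.CompensationHandler` (whose real parameter types are not reproduced here), `Scope` stands in for a BAScope, and nothing is persisted. It shows the three rules from the text: a rolled-back scope drives `compensate`, a committed child promotes its handlers to the parent, and a committed outermost scope drives `close`.

```java
import java.util.ArrayList;
import java.util.List;

class BAScopeSketch {
    // Hypothetical stand-in for a compensation handler with close and compensate callbacks.
    interface Handler {
        void close(String data);
        void compensate(String data);
    }

    // In-memory model of a BAScope tied to one unit of work. Real BAScopes also persist
    // the compensation data so handlers still run after a process failure.
    static final class Scope {
        private final Scope parent;
        private final List<Handler> handlers = new ArrayList<>();
        private final List<String> data = new ArrayList<>();

        Scope(Scope parent) {
            this.parent = parent;
        }

        // Called during forward processing to record who can undo the work, and with what data.
        void register(Handler handler, String compensationData) {
            handlers.add(handler);
            data.add(compensationData);
        }

        // Ends the scope in the same direction as its unit of work.
        void complete(boolean committed) {
            if (!committed) {
                for (int i = handlers.size() - 1; i >= 0; i--) {
                    handlers.get(i).compensate(data.get(i)); // undo newest work first
                }
            } else if (parent != null) {
                for (int i = 0; i < handlers.size(); i++) {
                    parent.register(handlers.get(i), data.get(i)); // promote to the parent scope
                }
            } else {
                for (int i = 0; i < handlers.size(); i++) {
                    handlers.get(i).close(data.get(i)); // outermost success: just clean up
                }
            }
            handlers.clear();
            data.clear();
        }
    }

    // Handler that just records which callback was driven.
    static Handler loggingHandler(List<String> log) {
        return new Handler() {
            public void close(String d) { log.add("close:" + d); }
            public void compensate(String d) { log.add("compensate:" + d); }
        };
    }

    public static void main(String[] args) {
        List<String> log = new ArrayList<>();
        Scope scope1 = new Scope(null);   // BAScope of EJBa's transaction T1
        Scope scope2 = new Scope(scope1); // BAScope of EJBb's transaction T2 (child)
        scope2.register(loggingHandler(log), "flight booking");
        scope2.complete(true);  // T2 commits: handler promoted to scope1, nothing driven yet
        scope1.complete(false); // T1 rolls back: the committed T2 work is compensated
        System.out.println(log); // prints [compensate:flight booking]
    }
}
```

If `scope1` had committed instead, the promoted handler's `close` would have been driven, matching the no-parent case described above.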

For further information on WS-BA, you can read the topic Transaction compensation and business activity support in the InfoCenter. There is also a simple and yet comprehensive WAS 6.1 WS-BA sample that can be downloaded from developerWorks.

It should be noted that the compensation support in WAS 6.1 is focused exclusively on tightly-coupled components using a synchronous request-response interaction pattern, and has no associated notion of business process choreography. More complex compensation support for microflows and BPEL processes, including WID development tooling, requires WebSphere Process Server.

Footnote: WAS BAScopes and standards

A little while ago I wrote a short piece on the heritage of WS-Coordination and its relationship with the CORBA Activity service and the J2EE Activity service (JSR 95). When we designed the BAScope feature for WAS 6.1, we chose to use the J2EE Activity service architecture as the basis for our WS-BA implementation. The WAS 6.1 BAScope support is architected as a J2EE Activity "High Level Service" (HLS). This is purely internal and has no impact on applications that use BAScope interfaces - we already had, in WAS, this extensible Activity service infrastructure (which is also used as the basis for WAS ActivitySession support) and simply built on this for our BAScope support. So while the WAS BAScope Java application programming interface is WAS-specific, the BAScope support itself is built upon open standards specifications. As mentioned earlier, BAScope contexts distributed between Web services use a standard WS-BA CoordinationContext and BAScope contexts distributed between EJBs use a standard CORBA CosActivity::ActivityContext service context.

-- Ian Robinson

Friday, May 25, 2007

Darryl K. Taft comments on IMPACT 2007

Darryl K. Taft at eWeek has a new article called "IBM Shows Impact of SOA". Excerpt:

Steve Mills, senior vice president, IBM Software, in a statement, said that "SOA has been a growth engine for IBM, as well as our customers, because it gives companies the much-needed flexibility to focus on achieving business results without being hindered by the constructs of established infrastructures. IBM's differentiation is in its ability to address business challenges using the right balance of business and technical skills along with an unmatched, multipronged approach to meeting customers' needs."

Mills also said he sees the worlds of Web 2.0 and SOA coming together to offer new opportunities for both vendors and users.

"We're bringing the people impact into the picture and leveraging things like RSS and Atom," Mills said. Web 2.0 technologies are bringing more usability, "consumability" and user-driven content into the equation, he said.

It's interesting to me that both Sandy Carter's book and Steve Mills' statement tied together SOA and Web 2.0. At first glance, it seems like the only thing they have in common is that they both are big buzzwords... and hey, why use one buzzword when you can use TWO! But looking a little deeper, I think in some ways Web 2.0 technologies are doing a great job of delivering SOA, particularly with regard to mashups. Stitching together a quick mashup using a bunch of existing services is very SOA. It also shows very clearly that SOA is not a technology offering. It's a way of doing business. Whether you use AJAX and JSON or EJB3 and web services, you can choose to architect your solutions using SOA principles.

Book review: "designing the obvious" by Robert Hoekman, Jr.

I recently finished Robert Hoekman's new book, "designing the obvious: a common sense approach to web application design". I enjoyed the book and thought that Hoekman had some good insights into what it means to produce quality design, though I also had some disagreements and found some of his advice to be of limited value in the domain of enterprise software.

One of the things I liked best was the section on "understanding users". This has long been a pet peeve of mine: while good designers obviously need to understand their users, I've often felt that running usability tests on designs is of limited value. And I think the issue is that we often ask the wrong questions. Hoekman has a great summary of this problem:

For example, if I were designing and building an application used to track sales trends for the Sales department in my own company, my first instinct... would be to hunt down Molly from Sales and ask her what she would like the application to do. Tell me, Molly, how should the Sales Trends Odometer display the data you need?

I would never ask my father the same type of question. My father knows next to nothing about how Web sites are built, what complicated technologies lie lurking beneath the pretty graphics, or the finer points of information architecture. He wouldn't even be able to pick a web application out of a lineup, because he doesn't understand what I mean by the word application...

What my father does know is that the model rockets he builds for fun on weekends require parts that he has to hunt down on a regular basis... If I told my dad he could buy these parts online, he'd be interested. If I asked him how the application should filter the online catalog and allow him to make purchases, he'd look at me like I was nuts.

Molly from Sales would likely do the same thing.

Molly has no idea what your software might be able to do, and she almost certainly doesn't have the technical chops to explain it.

I think this is a great point. We need to understand our users and how they use the product (or how they would use proposed new designs), but we should not be asking them to design our product. We're the professional software designers; we shouldn't abdicate our responsibilities to the user. It's nice to see Hoekman say that in print.

I also liked what Hoekman had to say about turning beginners into intermediates. Basically, the biggest proportion of most products' user base is the "perpetual intermediate". Someone who has learned just enough to get by, and has no plans to ever learn more. One of our goals is to help beginners reach this stage. WAS does quite a bit in this space, perhaps most notably in our "Command Assist" functionality in the console, which allows people to transition easily from using the console to using scripting.

The only major problem I had with the book, and it's not all that major, is that it was written with an assumption that bad design happens out of ignorance of many of the principles he is espousing: that people are not doing things the right way because they don't know the difference between the right way and the wrong way. Clearly, this is sometimes true, but I think it's the exception to the rule (at least it is the exception when there are professional usability people involved in the project... and the audience for the book seems to be professional usability people). The simple fact is that there is a cost associated with improving the design of a feature or a product. Designing things well takes more time and people. It usually means that the system is taking on more of the complexity from the user, which requires more coding and testing. Everyone wants good design, but when push comes to shove, I think many of our customers would admit that if better design means fewer features and longer time to market... well, it's not a no-brainer that better design should win the day. It's a balance. Sometimes better design is the right answer, and sometimes time-to-market pressures are too important to lengthen the release cycle to include an expensive, iterative design phase. Being able to tell the difference between those cases is the trick, and not an easy one. But the point is that the "obvious" design techniques that Hoekman discusses are already common design techniques in most shops... when there's time to do them.

But overall I thought it was an excellent book, especially for people who are trying to go from beginner to intermediate in their design skills. It's well-written and engaging, and that counts for a lot.

Jython scripting improvements in WAS V6.1

Two things we hear all the time from customers:

1. Scripting is used extensively and sometimes exclusively for managing production environments. In many shops, scripting is the primary interface to the WAS product.

2. Scripting is hard.

In WAS V6.1, we took a major step forward in improving the user experience for scripting by adding a Jython editor to the Application Server Toolkit, that provides the type of "code assist" that developers have come to expect in other languages.

I'm compelled to note that the script editor does not support Jacl. We're encouraging customers to move from Jacl to Jython, and this Jython editor is one way to encourage that movement.

Musings about Sandy Carter's new SOA book

From a user experience standpoint, I'm a "true believer" when it comes to SOA. I think SOA is exactly the right approach to make real world improvements in the amount of complexity that users need to consume in order to be productive. And I use those words very carefully, because I am of the school of thought that "complexity" is a zero-sum game. Those of us in user experience never eliminate complexity, we just move it around. SOA gives us an architecture to move around complexity in a more efficient manner, so that users can be productive in their particular job without needing to understand the complexity of the entire ecosystem.

So when I picked up Sandy Carter's new book, "The New Language of Business: SOA & Web 2.0", I was interested to see if she would touch on this perspective. Clearly, SOA is a vast topic that can be tackled from many different angles, so there was no guarantee that user experience would be addressed at all.

Naturally, given her background, Sandy focuses on the business side of the SOA equation. She hammers home the point that being flexible and responsive requires aligning business needs with IT needs, and that neither side can do the job alone. In addition, she makes it clear that this is nothing new... from Henry Ford to McDonald's, flexibility, responsiveness, and efficiency have always been major concerns for enterprises. What has changed is the set of tools now available to take this to the next level, such as standards-based technology that makes interoperability an assumption rather than a herculean effort. In particular, I enjoyed the case studies that described how real companies were adopting and benefiting from SOA approaches (including IBM). The one thing that stood out in these case studies was their diversity. Every company approached SOA differently, based on their business needs, their IT skills and architecture, and their process maturity. This is yet another reason why I appreciate SOA (and IBM in general): we don't try to claim that there are one-size-fits-all solutions to any of the problems facing enterprise customers today. And Sandy clearly did not try to make SOA appear easier than it really is.

But what about user experience? I'm happy to say that the book touched on user experience topics in several places, though it wasn't a major theme. When it was mentioned, she tended to focus on the user experience of the end users rather than the implementers. Again, this is not surprising given that the book focuses on the business justification for moving to SOA, and clearly one of those benefits is to allow customers to create a more seamless experience for their end users. For example, here's an excerpt from one of the case studies:

Standard Life group of companies Plc, headquartered in Edinburgh in the U.K., has become one of the world's leading financial services companies. The majority of Standard Life group of companies' business and revenue is generated through independent financial advisors (IFAs) that help their customers select financial and insurance products from a number of different assurance companies. Many IFAs utilize industry-sponsored portals to obtain product information, compare prices from multiple providers, and provide a single view of a customer's holdings.

Standard Life group of companies realized that, to remain competitive, it needed to offer its IFAs easier, more flexible, and quicker online access to its financial information. Standard Life group of companies also needed to reduce the cost of doing business with multiple business channels. By reducing its costs, not only could it improve its bottom line, but it also could improve its competitive standing and its relationships with its IFAs; more self-service and quicker processes via automation could help the IFAs improve their margins.

This is a message repeated in several places throughout the book: SOA techniques can allow our customers to provide a better user experience for their customers. But I would also add that SOA can provide a better user experience for IBM's middleware customers as well, because it lets them decide who in their organization is best able to handle the most complexity, and architect their solutions accordingly.