The OASIS standards consortium recently announced the completion of the WS-Transaction 1.1 standard, which specifies Web services protocols for two well-known transaction models: WS-AtomicTransaction (WS-AT) for atomic two-phase commit (2PC) transactions and WS-BusinessActivity (WS-BA) for compensating transactions.

WebSphere Application Server (WAS) has provided support for WS-AT 1.0 and WS-BA 1.0 (the input specifications to the OASIS WS-Transaction Technical Committee) since versions 6.0 and 6.1, respectively.

WS-AT support in WAS (since 6.0)

WS-AT is suited to short-running, tightly-coupled services because of its 2PC nature: participants and their resources are held in doubt during the second phase of 2PC. It is used to distribute an atomic transaction context between multiple application components so that any resources (e.g. databases, JMS providers, JCA resource adapters) used by those components are coordinated by WAS (using XA) to an atomic outcome. It is typically used between components deployed within a single enterprise, in two primary scenarios:

- A SOA deployment requiring atomic transactional outcomes between two or more service components.

- Transaction federation between heterogeneous runtime environments. WAS WS-AT interoperability has been tested with CICS and with the Microsoft .NET Framework 3.0 on Windows Vista.
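To make the in-doubt window described above concrete, here is a minimal, self-contained Java sketch of a generic two-phase commit coordinator. It illustrates the 2PC protocol only; it is not WAS's XA or WS-AT implementation, and the `Participant` interface and all class names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

/** Minimal model of the two-phase commit (2PC) protocol; all names are hypothetical. */
class TwoPhaseCommitSketch {

    enum Vote { PREPARED, ABORT }
    enum Outcome { COMMITTED, ROLLED_BACK }

    /** A resource enlisted in the atomic transaction (database, JMS provider, JCA adapter...). */
    interface Participant {
        Vote prepare();   // phase 1: harden the work, keep locks, and vote
        void commit();    // phase 2: make the work permanent and release locks
        void rollback();  // phase 2: undo the work and release locks (assumed idempotent)
    }

    /** Participant that records its state so the protocol can be observed. */
    static class RecordingParticipant implements Participant {
        private final boolean canPrepare;
        String state = "ACTIVE";

        RecordingParticipant(boolean canPrepare) { this.canPrepare = canPrepare; }

        public Vote prepare() {
            if (!canPrepare) {
                state = "ROLLED_BACK";   // a "no" vote rolls back unilaterally
                return Vote.ABORT;
            }
            state = "IN_DOUBT";          // locks are held until the coordinator decides
            return Vote.PREPARED;
        }

        public void commit()   { state = "COMMITTED"; }
        public void rollback() { state = "ROLLED_BACK"; }
    }

    /** Coordinator: drives every participant to the same atomic outcome. */
    static Outcome complete(List<? extends Participant> participants) {
        // Phase 1: collect votes, stopping at the first ABORT.
        List<Participant> prepared = new ArrayList<>();
        boolean allPrepared = true;
        for (Participant p : participants) {
            if (p.prepare() != Vote.PREPARED) {
                allPrepared = false;
                break;
            }
            prepared.add(p);
        }
        // Phase 2: either every participant commits or every participant rolls back.
        if (allPrepared) {
            for (Participant p : prepared) p.commit();
            return Outcome.COMMITTED;
        }
        for (Participant p : participants) p.rollback();
        return Outcome.ROLLED_BACK;
    }
}
```

A participant that has voted PREPARED stays IN_DOUBT, holding its locks, until phase 2 reaches it; that window is why WS-AT is best kept to short-running, tightly-coupled services.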

WS-AT is to Web services what the Object Transaction Service (OTS) is to remote EJBs, and in WAS neither requires any coding by the application developer. WAS ensures that the transaction context of the requester component is established by the container of the target component, regardless of whether the target is invoked as an EJB (using the EJB remote interface) or as a Web service (using JAX-RPC). The transaction can be started at the requester using the standard UserTransaction interface or, in the case of an EJB, as a standard EJB container-managed transaction; WAS decides whether to use OTS or WS-AT to propagate that transaction context on remote requests depending on the type of invocation, so no Java coding is required. If the requester is a Web service client, the application assembler simply needs to indicate, when assembling the requester component in AST or RAD, that Web Services Atomic Transaction should be used on requests, as described in the InfoCenter task Configuring transactional deployment attributes.
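The choice of propagation protocol belongs to the container, not the application. The following hypothetical Java sketch models that decision as the paragraph above describes it: the invocation type selects OTS or WS-AT, and for a Web service client the assembly-time WS-AT setting is modelled as a boolean flag. The `Invocation` and `Protocol` names are illustrative only and are not WAS internals.

```java
/** Hypothetical model of a container choosing a transaction-context protocol. */
class PropagationChooser {

    enum Invocation { EJB_REMOTE_INTERFACE, WEB_SERVICE_JAX_RPC }
    enum Protocol { OTS, WS_AT, NONE }

    /**
     * Picks the protocol used to carry the requester's transaction to the target.
     * wsAtOnRequests models the assembly-time "Web Services Atomic Transaction"
     * setting on a Web service client; application code never calls this.
     */
    static Protocol choose(Invocation invocation, boolean wsAtOnRequests) {
        switch (invocation) {
            case EJB_REMOTE_INTERFACE:
                return Protocol.OTS;                     // context flows over RMI/IIOP
            case WEB_SERVICE_JAX_RPC:
                return wsAtOnRequests ? Protocol.WS_AT   // context flows as a CoordinationContext
                                      : Protocol.NONE;   // in this model, not propagated
            default:
                throw new IllegalArgumentException("unknown invocation: " + invocation);
        }
    }
}
```

In both cases the requester's own code is the same: it begins and commits the transaction (or lets the EJB container do so) and makes an ordinary remote call.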

Distributed transactions in WAS, whether distributed between remote EJBs using OTS or between Web services using WS-AT, benefit from WAS transaction high-availability (HA) support. In a non-HA WAS configuration, transaction recovery following a server failure occurs when the WAS server is restarted. In a WAS HA configuration, transaction recovery can still occur when the failed server restarts, but in-doubt transactions can also be peer-recovered by an active server in the same core group, which continues to process its own, separate, transactional workload. Configuring WAS for transactional high availability is described in the InfoCenter topic Transactional high availability and also in a dedicated paper with some additional background, Transactional high availability and deployment considerations in WebSphere Application Server V6.
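The difference between restart recovery and peer recovery can be sketched as a small model. This is hypothetical Java, not WAS code: it assumes each server's recovery log (its list of in-doubt transactions) is held on storage that its core-group peers can reach, and all class and method names are invented for illustration.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical model of transaction peer recovery within a core group (not WAS code). */
class PeerRecoverySketch {

    static class Server {
        final String name;
        boolean running = true;
        final List<String> inDoubt = new ArrayList<>();   // this server's recovery log
        final List<String> resolved = new ArrayList<>();  // transactions it has completed

        Server(String name) { this.name = name; }
    }

    private final Map<String, Server> coreGroup = new LinkedHashMap<>();

    void join(Server server) { coreGroup.put(server.name, server); }

    /**
     * Marks a server as failed. An active peer takes over the failed server's
     * recovery log (assumed reachable) and resolves its in-doubt transactions
     * alongside its own work. Returns that peer, or null if no peer is active,
     * in which case recovery waits for the failed server to restart.
     */
    Server fail(String name) {
        Server failed = coreGroup.get(name);
        failed.running = false;
        for (Server peer : coreGroup.values()) {
            if (peer.running) {
                peer.resolved.addAll(failed.inDoubt);
                failed.inDoubt.clear();
                return peer;
            }
        }
        return null;
    }

    /** Non-HA path: the restarted server recovers its own log. */
    void restart(String name) {
        Server s = coreGroup.get(name);
        s.running = true;
        s.resolved.addAll(s.inDoubt);
        s.inDoubt.clear();
    }
}
```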

There is further information on WS-AT in the topic Web Services Atomic Transaction support in WebSphere Application Server in the InfoCenter.

Additional features of the WS-AT support in WAS 6.1

A documented limitation of the WAS 6.0 WS-AT support is that the WS-AT context cannot be propagated through a firewall. Strictly speaking, the context itself can be propagated through a firewall, but the participant registration protocol flow that follows typically cannot. Since WS-AT is primarily used within a single enterprise, this is not too onerous a restriction, but it clearly limits the topologies in which WS-AT can be used in WAS 6.0. The limitation is removed in WAS 6.1, where the WS-AT service can be configured with the hostname of the HTTP(S) proxy server to which protocol messages should be sent for routing to the WS-AT service. While any generic HTTP proxy can be used, the WebSphere Proxy Server (shipped as part of WAS ND) has additional built-in capabilities that recognize WS-AT context and WS-AT protocol messages, providing edge-of-domain support for WS-AT high availability and workload management and essentially extending these capabilities to non-WAS clients. The InfoCenter has more information on this in the topic Web Services transactions, firewalls and intermediary nodes.
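The effect of the proxy setting can be pictured as rewriting the protocol-service endpoint that remote participants are given, so that their messages (registration in particular) are addressed to the proxy rather than to the internal server. The Java sketch below is a hypothetical illustration of that idea using only `java.net.URI`; in WAS the proxy is a configuration setting, not application code, and the host names and the `/wsat/registration` path are invented placeholders.

```java
import java.net.URI;
import java.net.URISyntaxException;

/** Hypothetical sketch: addressing WS-AT protocol endpoints via an HTTP(S) proxy. */
class ProxyEndpointSketch {

    /**
     * Returns the endpoint a remote participant should use for protocol messages:
     * same scheme, path and query, but the proxy's host and port, so that the
     * message can cross the firewall and be routed on to the WS-AT service.
     */
    static URI viaProxy(URI serviceEndpoint, String proxyHost, int proxyPort) {
        try {
            return new URI(serviceEndpoint.getScheme(), serviceEndpoint.getUserInfo(),
                    proxyHost, proxyPort, serviceEndpoint.getPath(),
                    serviceEndpoint.getQuery(), serviceEndpoint.getFragment());
        } catch (URISyntaxException ex) {
            throw new IllegalArgumentException("cannot build proxy endpoint", ex);
        }
    }
}
```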

WS-BA support in WAS (since 6.1)

WS-BA is appropriate for long-running and loosely-coupled services. Its compensation model means that resource manager locks are not held for the duration of the transaction, and as a consequence intermediate results are exposed to other transactions. But WS-BA is not exclusively for use by long-running applications; short-duration, tightly-coupled applications can benefit from a compensating transaction model just as much. Scenarios where short-running applications may choose a compensating rather than an ACID model include:

- the use of resources which cannot be rolled back as part of a global (2PC) transaction. For example, an application that sends an email cannot roll this back after it has been sent - but it could send a follow-up email. Other examples of non-2PC resources are LocalTransaction/NoTransaction resource adapters (RAs) in J2EE.

- avoidance of in-doubt transactions in resource managers, where the application wishes for an atomic outcome but can tolerate intermediate state being available to other transactions.
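The email case in the list above is the classic compensation pattern: the original work cannot be undone, so a recorded compensating action (a follow-up email) is run instead. The hypothetical Java sketch below shows the generic pattern, with compensations run in reverse order of the forward work; it is not the WAS BAScope API, and the `Outbox` class stands in for any non-2PC resource.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

/** Hypothetical sketch of compensating work that cannot join a 2PC transaction. */
class CompensationSketch {

    /** Stand-in for a non-transactional resource such as an email gateway. */
    static class Outbox {
        final List<String> sent = new ArrayList<>();
        void send(String message) { sent.add(message); }  // cannot be "unsent"
    }

    private final Deque<Runnable> compensations = new ArrayDeque<>();

    /** Performs a step immediately and records how to compensate for it. */
    void perform(Runnable work, Runnable compensation) {
        work.run();                       // visible to others straight away
        compensations.push(compensation);
    }

    /** Activity failed: run compensations, most recent step first. */
    void compensate() {
        while (!compensations.isEmpty()) {
            compensations.pop().run();
        }
    }

    /** Activity succeeded: the recorded compensations are no longer needed. */
    void close() { compensations.clear(); }
}
```

Note that the first email is visible as soon as it is sent; this is the exposure of intermediate results that a compensating model accepts in exchange for never holding resources in doubt.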

WAS 6.1 introduces a construct called a BAScope, which is essentially an attribute that can be added to any WAS core unit of work (UOW), for example a global transaction (started either by the EJB container for an EJB using container-managed transactions (CMT) or by a bean using the JTA UserTransaction API). A BAScope associates a Business Activity with whatever WAS core UOW an application runs under, and has a context that is implicitly propagated on remote calls made by that application. If the remote calls are Web service calls, then the BAScope context is propagated as a WS-BA CoordinationContext. If the remote calls are RMI/IIOP EJB calls, then the BAScope context is propagated in an IIOP ServiceContext encapsulation of a WS-BA CoordinationContext (which is actually a CORBA Activity Service context, as described in the footnote to this post).
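The key point of the paragraph above is that one BAScope context travels in two different carriers depending on the kind of remote call, and both targets therefore join the same Business Activity. The hypothetical Java sketch below models only that mapping; the `CoordinationContext` class is a simplified stand-in holding an identifier and a registration endpoint, and the strings it returns describe the carriers rather than reproduce any real wire format.

```java
/** Hypothetical model of how one BAScope context travels on two kinds of remote call. */
class BAScopeContextSketch {

    /** Simplified CoordinationContext: an activity identifier and a registration endpoint. */
    static final class CoordinationContext {
        final String identifier;
        final String registrationService;

        CoordinationContext(String identifier, String registrationService) {
            this.identifier = identifier;
            this.registrationService = registrationService;
        }
    }

    enum CallType { WEB_SERVICE, RMI_IIOP_EJB }

    /** Describes the carrier used for the same context on each type of call. */
    static String carrier(CallType type, CoordinationContext ctx) {
        switch (type) {
            case WEB_SERVICE:
                // Sent as a WS-BA CoordinationContext in a SOAP header.
                return "SOAP header CoordinationContext " + ctx.identifier;
            case RMI_IIOP_EJB:
                // Encapsulated in an IIOP ServiceContext (a CORBA Activity Service context).
                return "IIOP ServiceContext(CoordinationContext " + ctx.identifier + ")";
            default:
                throw new IllegalArgumentException("unknown call type: " + type);
        }
    }
}
```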

Any deployed WAS component runs in a WAS core UOW scope, for example a global transaction or a local transaction containment (which is the WAS environment for what the EJB specification refers to as an "unspecified transaction context"). Any resources (JDBC, JMS, JCA RAs) used by the component are accessed in the context of that UOW. For example, an EJB with a transaction attribute of "RequiresNew" accesses resources in the scope of a global transaction. So here's what is new with BAScopes: any EJB deployed in WAS 6.1 or later may be assembled to have a BAScope associated with its core UOW, where the BAScope has the same lifecycle as the core UOW with which it is associated and completes in the same direction. An EJB that runs under a BAScope (regardless of the core UOW) may be configured (through assembly) with a compensation handler, which is a plain old Java object that implements the com.ibm.websphere.wsba.CompensationHandler interface methods close and compensate. The compensation handler logic needs to be provided as part of the application (and is assembled into the application EAR file), but it is the WAS BAScope infrastructure that ensures the compensation handler is driven appropriately when the BAScope ends, regardless of any process failures. The BAScope infrastructure also persists any compensation instance data provided by the EJB during forward processing for later use by the compensation handler.
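The lifecycle described above can be modelled in a few lines of plain Java. This is a hypothetical sketch: the `Handler` interface only mirrors the close/compensate idea of com.ibm.websphere.wsba.CompensationHandler, and its method signatures are illustrative, not the WAS API. In WAS the handler class is assembled into the EAR and the EJB supplies the compensation data during forward processing; the sketch combines both into one `register` call for brevity, keeps the data in memory instead of persisting it, and models a top-level BAScope only (no parent).

```java
import java.io.Serializable;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical model of a top-level BAScope driving a compensation handler. */
class BAScopeLifecycleSketch {

    /** Illustrative stand-in for a compensation handler; not the WAS signatures. */
    interface Handler {
        void close(Serializable data);       // scope ended successfully: tidy up
        void compensate(Serializable data);  // scope rolled back: undo non-transactional work
    }

    /** Example application handler in the spirit of a flight-booking provider. */
    static class FlightBookingHandler implements Handler {
        final List<String> log = new ArrayList<>();
        public void close(Serializable data)      { log.add("close " + data); }
        public void compensate(Serializable data) { log.add("cancel booking " + data); }
    }

    /** A BAScope with no parent, attached to one core unit of work. */
    static class BAScope {
        private Handler handler;
        private Serializable data;  // WAS persists this data; here it stays in memory

        /** Called during forward processing, e.g. after the EJB makes a booking. */
        void register(Handler handler, Serializable data) {
            this.handler = handler;
            this.data = data;
        }

        /** The scope completes in the same direction as its unit of work. */
        void unitOfWorkEnded(boolean committed) {
            if (handler == null) return;
            if (committed) handler.close(data);
            else handler.compensate(data);
        }
    }
}
```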

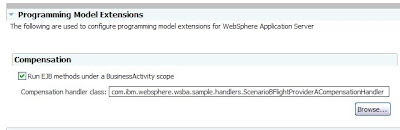

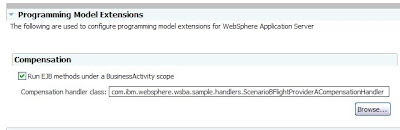

The figure below shows the AST pane for adding a BAScope compensation handler to an EJB.

In the above example, the

ScenarioBFlightProviderACompensationHandler application class implements

close and

compensate methods. If the EJB's transaction is rolled back, then the

compensate method will be driven which is an opportunity to compensate any non-transactional work. If the EJB's transaction is committed then the WAS BAScope support either promotes the compensation handler to any parent BAScope or, if there is no parent, drives the

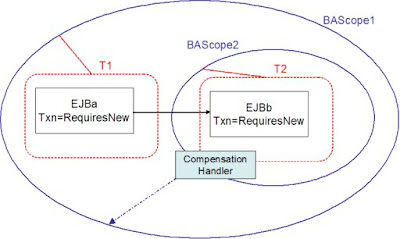

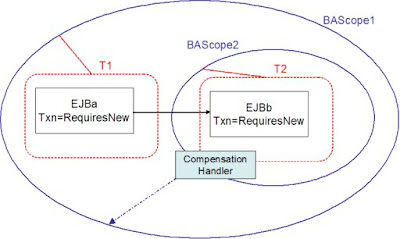

close method (which might typically perform some simple clean-up or else just be a no-op). BAScope parent-child relationships are managed by WAS and can be used to compensate work previously committed as part of a global transaction. For example, if EJBa running under transaction T1 calls EJBb running under transaction T2 and both EJBs are configured to run under a BAScope, then the BAScope associated with T2 is a child of the BAScope associated with T1 as illustrated in the diagram below.

EJBb can define a compensation handler class and register some data relevant to transaction T2. If T2 commits, then that compensation handler (and its compensation data) is promoted to the parent BAScope, the one associated with T1. If EJBa then hits an exception so that its transaction T1 is rolled back, the compensation handler is called to compensate for the work previously committed in T2.
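The EJBa/EJBb scenario can be traced with a small hypothetical model of nested BAScopes. The rules it encodes are the ones stated above: on rollback a scope compensates; on commit it promotes its handlers to its parent, or drives close if it has no parent. The classes are illustrative only and are not the WAS BAScope API.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical model of nested BAScopes and compensation-handler promotion. */
class NestedBAScopeSketch {

    interface Handler {
        void close();
        void compensate();
    }

    static class BAScope {
        final BAScope parent;
        final List<Handler> handlers = new ArrayList<>();

        BAScope(BAScope parent) { this.parent = parent; }

        void register(Handler h) { handlers.add(h); }

        /** Called when the associated unit of work (e.g. a global transaction) ends. */
        void complete(boolean committed) {
            if (!committed) {
                // Rolled back: compensate, most recently registered handler first.
                for (int i = handlers.size() - 1; i >= 0; i--) handlers.get(i).compensate();
            } else if (parent != null) {
                // Committed under a parent: promote handlers; the parent decides later.
                parent.handlers.addAll(handlers);
            } else {
                // Committed at top level: the work is final, so drive close.
                for (Handler h : handlers) h.close();
            }
            handlers.clear();
        }
    }
}
```

In the test below, the child scope (T2) commits and promotes its handler; the parent scope (T1) then rolls back, so the handler compensates work that T2 had already committed.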

For further information on WS-BA, you can read the topic Transaction compensation and business activity support in the InfoCenter. There is also a simple and yet comprehensive WAS 6.1 WS-BA sample that can be downloaded from developerWorks.

It should be noted that the compensation support in WAS 6.1 is focused exclusively on tightly-coupled components using a synchronous request-response interaction pattern, and has no associated notion of business process choreography. More complex compensation support for microflows and BPEL processes, including WID development tooling, requires WebSphere Process Server.

Footnote: WAS BAScopes and standards

A little while ago I wrote a short piece on the heritage of WS-Coordination and its relationship with the CORBA Activity service and the J2EE Activity service (JSR 95). When we designed the BAScope feature for WAS 6.1, we chose to use the J2EE Activity service architecture as the basis for our WS-BA implementation. The WAS 6.1 BAScope support is architected as a J2EE Activity "High Level Service" (HLS). This is purely internal and has no impact on applications that use BAScope interfaces; we already had, in WAS, this extensible Activity service infrastructure (which is also used as the basis for WAS ActivitySession support) and simply built on it for our BAScope support. So while the WAS BAScope Java application programming interface is WAS-specific, the BAScope support itself is built upon open standards specifications. As mentioned earlier, BAScope contexts distributed between Web services use a standard WS-BA CoordinationContext, and BAScope contexts distributed between EJBs use a standard CORBA CosActivity::ActivityContext service context.

-- Ian Robinson