Two weeks ago, I said I'd be doing a weeklong series of posts on WAS V7.0 performance. A week late, but here we go. I guess I can say I delayed until WAS V7.0 was generally available, which it is now (trial download here)! WAS V7.0 should be available by other supported download methods as well.

There are many impressive areas of performance improvement in WAS V7.0, but I'll start with the one nearest and dearest to my heart: Web Services. In the past, I talked about web services performance through the years. I mentioned a possible follow-up blog post on Feature Pack for Web Services performance. I never followed up on that (hrm, a theme in my slow blogging), but now that the same code is available in WAS V7.0, I will. In fact, we've made even more improvements to web services performance in WAS V7.0 that would truly make the "performance through the years" chart from my previous blog post much more impressive.

WAS V6.1 supports the J2EE 1.4 JAX-RPC web service programming model. Last year, we added Java EE 5 support for the JAX-WS web service programming model (expanded functionality, standards support, easier programming model, and enhanced management support for services) through the Feature Pack for Web Services. While WAS V7.0 continues support of both the JAX-RPC and JAX-WS programming models so that existing JAX-RPC web services applications deployed on previous versions of WebSphere will run on V7 unmodified, the development and performance resources were almost exclusively dedicated to the JAX-WS work. We encourage customers to move to this new programming model to take advantage of the new features as well as the significant performance gains. Many customers I know of have moved to the Feature Pack for Web Services already, but now that it's natively supported in WAS V7.0, I expect even more customers to make a move from JAX-RPC to JAX-WS.

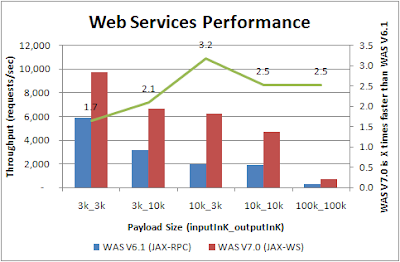

The chart above compares WAS V6.1 JAX-RPC performance to WAS V7.0 JAX-WS performance. JAX-WS web services significantly outperform JAX-RPC web services for all message payload sizes shown. For a 3k (input)/3k (output) message size, JAX-WS achieves 70% higher throughput, and for a 10k/10k message size JAX-WS achieves over 2x higher performance. For 10k/3k, 10k/10k, and 100k/100k, JAX-WS achieves over 3x/2.5x/2.5x higher performance, respectively.

There are many reasons for these performance gains, but the most significant one is the optimized data binding using JAXB in JAX-WS. JAXB provides JAX-WS the framework to unmarshal XML documents into Java objects or marshal Java objects into XML. We have done very impressive optimizations in our JAXB implementation to achieve these performance gains, well beyond what most JAXB implementations can do with StAX or SAX. Getting high performance XML parsing combined with JAXB's full support for XML Schema is an impressive accomplishment.

Some things that matter to you specifically: This is a primitive benchmark, as it only measures web services performance (no business logic). Therefore, your application using web services will not go 2-3 times faster just by switching to JAX-WS and JAXB, but it should markedly improve. Specifically, the additional latency involved in exposing business logic to web services and XML should go down by one-half to two-thirds, which in many scenarios is substantial. Also, even though you can handle XML yourself in JAX-WS by getting the data as a stream or DOM, it's best if you use JAXB, as you'll get the most performance benefit from our optimizations.
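To make that arithmetic concrete, here is a rough sketch. Only the one-half to two-thirds reduction in web services overhead comes from the numbers above; the 8 ms of business logic and 2 ms of web services overhead are made-up figures, and the `WsLatencyEstimate` class is purely illustrative.

```java
import java.util.Locale;

// Back-of-the-envelope estimate: only the web services overhead shrinks,
// the business logic time stays the same.
final class WsLatencyEstimate {

    /** End-to-end latency after the overhead is cut by the given fraction (0.5 = cut in half). */
    static double totalAfter(double businessMs, double wsOverheadMs, double overheadReduction) {
        return businessMs + wsOverheadMs * (1.0 - overheadReduction);
    }

    /** Overall speedup = latency before / latency after. */
    static double speedup(double businessMs, double wsOverheadMs, double overheadReduction) {
        double before = businessMs + wsOverheadMs;
        return before / totalAfter(businessMs, wsOverheadMs, overheadReduction);
    }

    public static void main(String[] args) {
        double businessMs = 8.0;   // assumed time spent in business logic
        double wsOverheadMs = 2.0; // assumed time spent in the web services layer

        // Overhead cut in half: 10 ms becomes 9 ms end to end.
        System.out.println(String.format(Locale.ROOT, "with business logic: %.2fx",
                speedup(businessMs, wsOverheadMs, 0.5)));

        // No business logic at all (the primitive benchmark case): 2 ms becomes 1 ms.
        System.out.println(String.format(Locale.ROOT, "primitive benchmark: %.2fx",
                speedup(0.0, wsOverheadMs, 0.5)));
    }
}
```

With these assumed numbers the primitive case doubles in speed while the full request improves by roughly 11%, which is why the gain you see depends on how much of each request is web services overhead.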

I'm interested in feedback from users that have tried the new engine.

9 comments:

Hi Andrew,

WebSphere has two web services engines, Axis and a native one? If my assumption is correct, I would assume that the native engine is much faster than the Axis engine.

Chris

There are multiple parts to your question. Therefore, let me break it down.

You started off by asking about multiple web services runtimes.

First, WebSphere Application Server offers multiple programming models for Web Services: JAX-RPC and JAX-WS are the most well known, however, SAAJ can also be natively used, and we still have WSIF. We know that with these programming models, the majority of processing cost for web services is centered on marshalling/demarshalling the XML to and from Java artifacts.

JAX-RPC has been around for a while, and in WebSphere we use a derivative of Apache Axis 1.0 (which we took and modified a long time ago, in WAS 5.0.2). Its implementation is SAX-based and ties together the invocation model and the data binding (marshalling/demarshalling). The implementation uses SAX, and the processing this requires converts the data into char[] events and then eventually into JAX-RPC Java Beans.
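As a rough illustration of what a SAX-based model looks like (this is not the WebSphere or Axis code), the sketch below uses the standard JDK SAX parser to turn char[] events into a bean. The `Order` bean, the element names, and the `SaxOrderReader` class are all hypothetical.

```java
import java.io.StringReader;
import javax.xml.parsers.SAXParserFactory;
import org.xml.sax.Attributes;
import org.xml.sax.InputSource;
import org.xml.sax.helpers.DefaultHandler;

final class SaxOrderReader {

    /** Hypothetical bean standing in for a generated JAX-RPC type. */
    static final class Order {
        String id;
        int quantity;
    }

    static Order parse(String xml) {
        final Order order = new Order();
        final StringBuilder text = new StringBuilder();

        DefaultHandler handler = new DefaultHandler() {
            @Override
            public void startElement(String uri, String localName, String qName, Attributes atts) {
                text.setLength(0); // new element: reset the text buffer
            }

            @Override
            public void characters(char[] ch, int start, int length) {
                // The parser pushes text as char[] slices, possibly several per element.
                text.append(ch, start, length);
            }

            @Override
            public void endElement(String uri, String localName, String qName) {
                // Only now can the buffered characters be converted into bean fields.
                if ("id".equals(qName)) {
                    order.id = text.toString().trim();
                } else if ("quantity".equals(qName)) {
                    order.quantity = Integer.parseInt(text.toString().trim());
                }
            }
        };

        try {
            SAXParserFactory.newInstance().newSAXParser()
                    .parse(new InputSource(new StringReader(xml)), handler);
        } catch (Exception e) {
            throw new IllegalStateException("could not parse order", e);
        }
        return order;
    }

    public static void main(String[] args) {
        Order o = parse("<order><id>A-17</id><quantity>3</quantity></order>");
        System.out.println(o.id + " x " + o.quantity); // prints "A-17 x 3"
    }
}
```

Note how the parser drives the code: every piece of text passes through a callback and an intermediate buffer before it reaches the bean.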

JAX-WS, on the other hand, offers a couple of different aspects of usage for handling data binding. The typical support uses JAXB, which inherently is positioned to allow for stream-based processing. With the implementation of JAXB being able to support and utilize StAX, the processing is more efficient, and therefore, its performance is better than our previous SAX-based model (that's part of the optimizations that have taken place throughout the years).
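For comparison, here is a minimal sketch of stream-based (pull) processing with the standard JDK StAX API. It populates a bean by hand, so it only illustrates the parsing style that a JAXB implementation can build on. It is not JAXB itself and not our implementation, and the `Order` bean and `StaxOrderReader` class are hypothetical.

```java
import java.io.StringReader;
import javax.xml.stream.XMLInputFactory;
import javax.xml.stream.XMLStreamConstants;
import javax.xml.stream.XMLStreamException;
import javax.xml.stream.XMLStreamReader;

final class StaxOrderReader {

    /** Hypothetical bean standing in for a JAXB-bound type. */
    static final class Order {
        String id;
        int quantity;
    }

    static Order parse(String xml) {
        Order order = new Order();
        try {
            XMLStreamReader reader =
                    XMLInputFactory.newInstance().createXMLStreamReader(new StringReader(xml));
            // The application pulls events and asks only for what it needs.
            while (reader.hasNext()) {
                if (reader.next() == XMLStreamConstants.START_ELEMENT) {
                    String name = reader.getLocalName();
                    if ("id".equals(name)) {
                        order.id = reader.getElementText().trim();
                    } else if ("quantity".equals(name)) {
                        order.quantity = Integer.parseInt(reader.getElementText().trim());
                    }
                }
            }
            reader.close();
        } catch (XMLStreamException e) {
            throw new IllegalStateException("could not parse order", e);
        }
        return order;
    }

    public static void main(String[] args) {
        Order o = parse("<order><id>A-17</id><quantity>3</quantity></order>");
        System.out.println(o.id + " x " + o.quantity); // prints "A-17 x 3"
    }
}
```

Here the consuming code controls the loop and reads element text directly into the target field, with no callback state to manage.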

JAX-WS also allows you to use a Provider (and get access to the Source directly). Therefore, the message can also be processed with other data binding technologies.
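A sketch of what working with the Source directly can look like is below. The static `invoke` method has the same shape as `Provider<Source>.invoke(Source)`, but the class deliberately does not implement the JAX-WS interface or carry its annotations, so that it runs with only the JDK's XML APIs. The `EchoSourceHandler` class, the element names, and the echo behavior are all made up for illustration.

```java
import java.io.StringReader;
import java.io.StringWriter;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.transform.OutputKeys;
import javax.xml.transform.Source;
import javax.xml.transform.Transformer;
import javax.xml.transform.TransformerFactory;
import javax.xml.transform.dom.DOMResult;
import javax.xml.transform.dom.DOMSource;
import javax.xml.transform.stream.StreamResult;
import javax.xml.transform.stream.StreamSource;
import org.w3c.dom.Document;
import org.w3c.dom.Element;

final class EchoSourceHandler {

    /** Same shape as Provider<Source>.invoke(Source): raw XML in, raw XML out. */
    static Source invoke(Source request) {
        try {
            // Read the request into a DOM tree; any other binding technology could be used here.
            DOMResult parsed = new DOMResult();
            TransformerFactory.newInstance().newTransformer().transform(request, parsed);
            String text = ((Document) parsed.getNode()).getDocumentElement().getTextContent();

            // Build the response as a DOM tree so that text content is escaped correctly.
            Document reply = DocumentBuilderFactory.newInstance().newDocumentBuilder().newDocument();
            Element root = reply.createElement("echoResponse");
            root.setTextContent(text.trim());
            reply.appendChild(root);
            return new DOMSource(reply);
        } catch (Exception e) {
            throw new IllegalStateException("could not process request", e);
        }
    }

    /** Helper for the demo: serialize a Source to a String without the XML declaration. */
    static String serialize(Source source) {
        try {
            Transformer t = TransformerFactory.newInstance().newTransformer();
            t.setOutputProperty(OutputKeys.OMIT_XML_DECLARATION, "yes");
            StringWriter out = new StringWriter();
            t.transform(source, new StreamResult(out));
            return out.toString();
        } catch (Exception e) {
            throw new IllegalStateException("could not serialize response", e);
        }
    }

    /** Convenience wrapper: String request in, String response out. */
    static String roundTrip(String requestXml) {
        return serialize(invoke(new StreamSource(new StringReader(requestXml))));
    }

    public static void main(String[] args) {
        System.out.println(roundTrip("<echo>hello</echo>"));
    }
}
```

In a real endpoint the container supplies the request Source and consumes the returned one. The point of the sketch is only that the code between the two is entirely yours.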

So – we have multiple implementations of a web services stack.

Second, the programming model is somewhat tied directly to an implementation by the fact that the programming model, to some extent, dictates how the implementation must work. For example, the fact that JAX-WS uses SAAJ for its handler model means that WebSphere has to be prepared to expose manipulation of SAAJ to its customers. There are performance costs associated with transforming to SAAJ (if the implementation doesn’t natively use SAAJ). Fortunately, WebSphere has had some experience with this approach and we try to do so as lazily as possible. While WebSphere doesn't support Apache Axis2 directly, Axis2 is an integral part of WebSphere’s core implementation for JAX-WS. Our goal was to provide the JCP-based APIs, since they are standard, which provides the level of stability our enterprise users desire (as well as complete support for XML schema mapping to Java).
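The idea of converting "as lazily as possible" can be sketched in general terms as follows. This is not how WebSphere does it internally: a plain DOM tree stands in for the SAAJ model, and the `LazyMessage` class and its method names are hypothetical.

```java
import java.io.StringReader;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.xml.sax.InputSource;

/** Keeps the message in its raw form and builds the tree only if someone asks for it. */
final class LazyMessage {
    private final String rawXml;
    private Document tree;  // stays null until a caller needs tree access
    private int parseCount; // for the demo: how many times the tree was built

    LazyMessage(String rawXml) {
        this.rawXml = rawXml;
    }

    /** Cheap path: callers that never touch the tree never pay for it. */
    String raw() {
        return rawXml;
    }

    /** Expensive path: build the tree on first use and reuse it afterwards. */
    Document tree() {
        if (tree == null) {
            try {
                tree = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                        .parse(new InputSource(new StringReader(rawXml)));
            } catch (Exception e) {
                throw new IllegalStateException("could not build message tree", e);
            }
            parseCount++;
        }
        return tree;
    }

    int parseCount() {
        return parseCount;
    }

    public static void main(String[] args) {
        LazyMessage message = new LazyMessage("<order><id>A-17</id></order>");
        System.out.println("parses before tree access: " + message.parseCount());
        message.tree();
        message.tree();
        System.out.println("parses after two tree accesses: " + message.parseCount());
    }
}
```

A request that passes through without any handler touching the tree never triggers the conversion, which is the cost the lazy approach is meant to avoid.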

Stepping back to your original question and your point about Axis2 and the native stack, I am interpreting that to be about our JAX-RPC stack and our JAX-WS stack (where native is the JAX-RPC-based implementation and Axis2 is our JAX-WS-based implementation). As such, no, the JAX-RPC stack is not faster (for the reasons given above). The JAX-WS stack is faster.

Moving a bit forward, if you then wanted to compare our JAX-WS stack based on Axis2 with pure Axis2, the comparison really centers around 2 points.

a) JAX-WS and JAXB as stable JCP-centric APIs vs. Axis2 and ADB

b) Implementation-based technology, which optimizes various paths through the code.

We chose JCP as stability and portability were important for our enterprise customers, and full XML data mapping with JAXB was important. However, we wanted to help build upon open source, in order to promote and extend that model moving forward.

Third, and this addresses point b), the optimizations that Andrew is referring to in V7.0 have introduced some tighter coupling between JAXB and the parser in our implementation. This integration has shown some significant improvements (and all of that benefits our JAX-WS users, as well as native JAXB users). The optimizations go beyond the StAX level (so, from that perspective, they are native to our WebSphere implementation). Hope that helps explain a bit about our runtime and rationale.

Chris, as Greg pointed out, our heritage is to build upon and support open source (which drives standards and ubiquity) while adding performance and enterprise qualities of service and management as "value add". Therefore, our native web services engine (which is based upon open source) is faster than running the open source engine itself.

What is the system under load? 10,000 requests seems nice.

Orjan,

The hardware was:

Intel Xeon MP 4 x 3.0 GHz with 2MB L2 cache per core (16x with HT Enabled).

I'd note a few things.

Regardless of hardware, we'd see similar improvements (well sorta). We worked on SMP scalability in this release (across all parts of the application server) and, therefore, you'll see more of an improvement on higher SMP systems.

Also, as I noted, this is a "primitive benchmark", so you won't get 10,000 req/sec on workloads that have business logic behind the web service invocation. Of course, it's good to know what the maximum throughput of web services is, which as you can see is very impressive! The additional latency of web services shouldn't be a concern in most customer scenarios that use sufficiently coarse-grained service interactions.

Guys,

Thanks for the information by the way.

Regards,

Chris

Greg/Andrew: Is the benchmark available for us to download and run?

Sanjiva.

Sanjiva,

The results were only illustrative, to show how WAS customers will benefit from moving from older WAS releases to WAS V7. I would gladly work with any customer on their application to see what similar performance advances they achieve. The web service workload noted in the post is really an internal workload to help guide our performance and development teams. Our public benchmarking efforts are aligned with standards-based benchmarking organizations like SPEC and TPC, as you can see here (http://webspherecommunity.blogspot.com/2008/07/tpc-is-working-on-soa-benchmark.html). We would encourage all vendors to join the TPC and IBM in this effort to create a standard public benchmark for Web Services and SOA.

Hi Andrew,

I have a question regarding the part "Also, even though you can handle XML yourself in JAX-WS by getting the data as a stream or DOM, it's best if you use JAXB as you'll get the most performance benefit of our optimizations."

We are testing our product on WAS7 which is the target web application server for our next release.

I am experiencing a strange problem when I try to change my JAX-WS endpoint implementation from a normal service endpoint interface (SEI) implementation to the JAX-WS Provider API.

I thought that getting down to the level of the raw request/response would give me better performance figures, since there is no JAXB marshalling involved, but informal testing showed that the Provider API is even slower than the old implementation, which uses an SEI and JAXB binding.

Do you have any information/idea regarding how Provider API may be slower than SEI+JAXB?

Thanks very much.
